The symbolic systems approach and AI planning work great for applications that have a limited number of matching patterns; for example, a program that helps you complete your tax return. The IRS provides a limited number of forms and a collection of rules for reporting tax-relevant data. Combine the forms and instructions with the capability to crunch numbers and some heuristic reasoning, and you have a tax program that can step you through the process. With heuristic reasoning, introduced in the previous chapter, you can limit the number of patterns; for example, if you earned money from an employer, you complete a W-2 form. If you earned money as a sole proprietor, you complete Schedule C.

The limitation with this approach is that the database is difficult to manage, especially when rules and patterns change. For example, malware (viruses, spyware, computer worms and so forth) evolve too quickly for anti-malware companies to manually update their databases. Likewise, digital personal assistants, such as Siri and Alexa, need to constantly adapt to unfamiliar requests from their owners.

To overcome these limitations, early AI researchers started to wonder whether computers could be programmed to learn new patterns. Their curiosity led to the birth of machine learning — the science of getting computers to do things they weren't specifically programmed to do.

Machine learning got its start very shortly after the first AI conference. In 1959, AI researcher Arthur Samuel created a program that could play checkers. This program was different. It was designed to play against itself so it could learn how to improve. It learned new strategies from each game it played and after a short period of time began to consistently beat its own programmer.

A key advantage of machine learning is that it doesn't require an expert to create symbolic patterns and list out all the possible responses to a question or statement. On its own, the machine creates and maintains the list, identifying patterns and adding them to its database.

Imagine machine learning applied to the Chinese room experiment. The computer would observe the passing of notes between itself and the person outside the room. After examining thousands of exchanges, the computer identifies a pattern of communication and adds common words and phrases to its database. Now, it can use its collection of words and phrases to more quickly decipher the notes it receives and quickly assemble a response using these words and phrases instead of having to assemble a response from a collection of characters. It may even create its own dictionary based on these matching patterns, so it has a complete response to certain notes it receives.

Machine learning still qualifies as weak AI, because the computer doesn't understand what's being said; it only matches symbols and identifies patterns. The big difference is that instead of having an expert provide the patterns, the computer identifies patterns in the data. Over time, the computer becomes "smarter."

Machine learning has become one of the fastest growing areas in AI primarily because the cost of data storage and processing has dropped dramatically. We are currently in the era of data science and big data — extremely large data sets that can be computer analyzed to reveal patterns, trends and associations. Organizations are collecting vast amounts of data. The big challenge is to figure out what to do with all this data. Answering that challenge is machine learning, which can identify patterns even when you really don't know what you're looking for. In a sense, machine learning enables computers to find out what's inside your data and let you know what it found.

Machine learning moves past the limitations with symbolic systems. Instead of memorizing symbols a computer system uses machine learning algorithms to create models of abstract concepts. It detects statistical patterns by using machine learning algorithms on massive amounts of data.

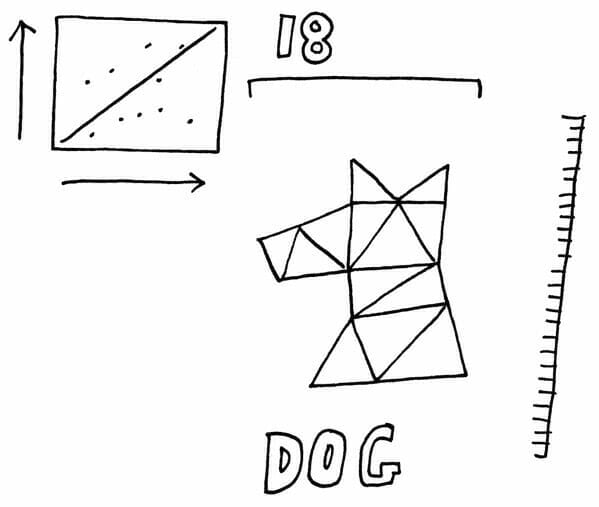

So a machine learning algorithm looks at the eight pictures of different dogs. Then it breaks down these pictures into individual dots or pixels. Then it looks at these pixels to detect patterns. Maybe it sees a pattern all of these animals as having hair. Maybe it sees a pattern for noses or ears. It could even see a pattern that humans are unable to perceive. Collectively, the patterns create what might be considered a statistical expression of “dogness.”

Sometimes humans can help machines learn. We can feed the machine millions of pictures that we’ve already determined contained dogs, so the machine doesn’t have to worry about excluding images of cats, horses or airplanes. This is called supervised learning, and the data, consisting of the label “dog” and the millions of pictures of dogs is called a training set. Using the training set, a human being is teaching the machine that all of the patterns it identifies are characteristics of “dog.”

Machines can also learn completely on their own. We just feed massive amounts of data into the machine and let it find its own patterns. This is called unsupervised learning.

Imagine a machine examining all the pictures of people on your smart phone. It might not know if someone was your husband, wife, boyfriend or girlfriend. But it could create clusters of people that it sees are closest to you.

Software developers have a popular saying, “Garbage in, garbage out.” They even have an acronym for it: GIGO. What is true for computer science is true for data science, as well, and perhaps even more so — if the data you’re analyzing is false, misleading, out of date, irrelevant, or insufficient, any decision based on that data is likely to be a poor decision.

The challenge of GIGO is compounded by the fact that data volume is growing exponentially. Big data is certainly valuable, but big data is accompanied by big garbage — inaccurate or irrelevant data that can contaminate the pools of data being analyzed. Big garbage creates a lot of “noise” that can result in misleading information and projections.

In this article, I discuss various ways to prevent big data from becoming big garbage.

More isn’t necessarily better when it comes to data. While you can’t always determine ahead of time which data is relevant and which isn’t, try to be selective when choosing data sets for analysis. Focus on key data. Including excess data is likely to cloud the analytics in addition to increasing demand on potentially limited resources, such as storage and compute.

Data warehouses tend to become cluttered with old data that may no longer be relevant. Your data team must decide whether to keep all the data or delete some of it. That decision isn’t always easy. Some analysts argue that storage (especially cloud storage) is inexpensive and that you never know when a volume of historical data will come in handy, so retaining all data is the best approach. Besides, buying more storage is probably cheaper and less aggravating than engaging in tiresome data retention meetings. Others argue that large volumes of data push the limits of the data warehouse and increase the chances of irrelevant data producing misleading reports, so deleting old data makes the most sense.

The only right decision regarding whether to retain or delete old data is the one that’s best for the organization and its business intelligence needs. You can always archive old data that isn’t likely to be useful and then delete it if nobody has used it in the past couple years. Whatever you choose to do with old data, you should have a strategy in place.

Many companies collect as much data as possible out of fear that they will fail to capture data that is later deemed essential. These companies often end up with unmanageable systems in which the integrity and hence the reliability of the data suffers.

I once worked for a company that fell into this common trap. They owned a website that connected potential car buyers with automobile dealerships. They created a tagging system that recorded all activity on the website. Whenever a customer pointed at or clicked on something, opened a new page, closed a page, or acted in any other way, the site recorded the event.

The system grew into thousands of tags. Each of these tags recorded millions of transactions. Only a few people in the company understood what each tag represented. They could tell the number of times someone interacted with a tagged object, but only a few people could figure out from the tag which object it was or how it related to the person who interacted with it.

They used the same tagging system for advertisements and videos posted on the site. They wanted to connect the tag to the image and the transaction, which allowed them to see the image that the customer clicked as well as data from the tag that indicated where the image was located on the page. All of this information was stored in an expanding Hadoop cluster. Unfortunately, the advertisements constantly changed, and the people in charge of tagging items started renaming the tags, so the integrity of the data being captured suffered.

The problem wasn’t that the organization was capturing too little or too much data but that it had no system in place for organizing and understanding that data.

To prevent big data from becoming big garbage, the most important precaution is to be sure that the data team is making conscious decisions and acting with intent. You don’t want a data policy that changes every few months. Decide in advance what you want to capture and save — what data is essential for the organization to achieve its business intelligence goals. Work with the team to make sure everyone agrees with and understands the policy. If you don’t have a set policy, you may corrupt all the data or just enough of it to destroy its integrity, and unreliable data can be worse than having no data at all.

You can’t have a discussion about big data or data science these days without Hadoop basics, but when you ask people what Hadoop is, they struggle to define it. I define Hadoop as a software framework for harnessing the power of distributed, parallel computing to store, process, and analyze large volumes of structured, semi-structured, and unstructured data.

Imagine having to write a 30 chapter book. You have two options — write it yourself or write the one chapter you know the most about and farm out the other 29 chapters to various experts on those topics. Which option would deliver the best book in the least amount of time? Obviously, distributing the work among 30 experts would expedite the process and result in a higher quality product. The same is true with distributed, parallel computing; instead of having one computer do all the work, you distribute tasks to dozens, hundreds, or even thousands of powerful “commodity” servers that perform the work as a collective. This group of servers is often referred to as a Hadoop cluster, and Hadoop coordinates their efforts.

Hadoop has three key features that differentiate it from traditional relational databases, which make it an attractive option for working with big data:

Hadoop has several advantages over traditional relational databases, including the following:

Hadoop certainly helps to overcome many of the challenges of big data, but it is not, in itself, an ideal solution. It is weak in the following areas:

Commercial versions of Hadoop are available to help overcome these and other limitations of the open source Apache Hadoop, including Amazon Web Services Elastic MapReduce, Cloudera, Hortonworks, and IBM InfoSphere BigInsights.

Big data is a term that describes an immense volume of diverse data typically analyzed to identify patterns, trends, and associations. However, the term “big data” didn’t start out that way. In 1997, NASA researchers Michael Cox and David Ellsworth described a “big data problem” they were struggling with. Their supercomputers were performing simulations of airflow around aircraft and generating massive volumes of data that couldn’t be processed or visualized effectively. The data were pushing the limits of their computer storage and processing, which was a problem — a big problem. In this context, the term “big data problem” was used more to describe a big problem than big data; NASA was facing a big, data problem, not so much a big-data problem.

A decade later, a McKinsey report entitled “Big data: The next frontier for innovation, competition, and productivity” reinforced the use of the term “big data” in the context of a problem that “leaders in every sector will have to grapple with.” The authors refer to big data as data that exceeds the capability of commonly used hardware and software.

Over time, defining big data has taken on a life and meaning of its own, beyond the context of a problem, to include the potential value of that data, as well. Now, big data poses both big problems and big opportunities.

Many organizations that start big-data projects don’t actually have big data. They may have a lot of data, but volume is just one criterion. These organizations may also mistakenly think that they have a big-data problem, because of the challenges they face in capturing, storing, and processing their data. However, data doesn’t constitute big data unless it meets the following criteria (also known as the four V’s):

Volume: In the world of big data, volume is no longer measured in megabytes and gigabytes but is now measured in terabytes, petabytes, exabytes, zettabytes, and yottabytes. Imagine the volume of data generated around the world every day by the over six billion smart phone users. Add to that Internet data and machine-generated data from the growing number of devices that comprise the Internet of Things (IoT), along with data from numerous other sources.

An interesting example of a big data problem is the challenge surrounding self-driving cars. To enable a self-driving car to safely navigate from point A to point B without running over pedestrians or crashing into objects, you would need to collect, process, and analyze a heavy stream of diverse data, including audio, video, traffic reports, GPS location data, and more, all flowing into the database in real time and at a high velocity. You would also need to evaluate which data is most reliable; for example, the historical data showing that the left lane is open to traffic, or the live video of a sign telling drivers to merge right. Is that person standing at the corner going to dart out in front of the car or wait for Walk signal? Whether the driver is human or the car is navigated by big data, a split-second decision is often required to prevent a serious accident. A driverless car would have to instantly process the video, audio, and traffic coordinates, and then “decide” what to do. That’s a big data problem.

Technology is evolving to solve most big data problems, and the cloud is playing a key role in this process. The cloud offers virtually unlimited storage and compute, so organizations no longer need to bump up against limitations in their on-premises data warehouses. In addition, business intelligence (BI) software is becoming increasingly sophisticated, enabling organizations to extract value from data without requiring a high level of technical expertise from users.

Still, many organizations struggle with data problems, both big and small. Some continue to struggle to meet storage and compute limitations simply because they are reluctant to move their on-premises data warehouses to the cloud. However, most organizations that struggle with data simply don’t know what to do with the data they have and the vast amounts of diverse data that are now readily available. Their problem with data is that they haven’t developed the culture of curiosity and innovation required to put all the data available to good use. In many ways, this shortcoming in organizations poses the real big data problem.