The symbolic systems approach and AI planning work great for applications that have a limited number of matching patterns, such as a program that helps you complete your tax return. The IRS provides a limited number of forms and a collection of rules for reporting tax-relevant data. Combine the forms and instructions with the capability to crunch numbers and some heuristic reasoning, and you have a tax program that can step you through the process. With heuristic reasoning, introduced in the previous chapter, you can limit the number of patterns. For example, if you earned money from an employer, you report the wages shown on your W-2 form; if you earned money as a sole proprietor, you complete Schedule C.
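At its core, a program like this is a fixed table of rules. The sketch below is a deliberately simplified, hypothetical illustration (the `FORM_RULES` table and the `forms_needed` function are invented for this example and are not real tax logic): each recognized income source maps to one form, and anything the rules do not cover is simply ignored.

```python
# Hypothetical, heavily simplified rules: each income source maps to the
# IRS form associated with reporting that income. Not real tax logic.
FORM_RULES = {
    "employer": "W-2",
    "sole_proprietorship": "Schedule C",
}


def forms_needed(income_sources):
    """Return the forms triggered by the taxpayer's income sources.

    Only sources with a matching rule are considered; anything else is
    skipped, which is how a fixed rule set keeps the patterns manageable.
    """
    return [FORM_RULES[source] for source in income_sources if source in FORM_RULES]


print(forms_needed(["employer", "sole_proprietorship"]))
```

The weakness of the approach is visible here: a new kind of income requires someone to edit `FORM_RULES` by hand.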

The limitation with this approach is that the database is difficult to manage, especially when rules and patterns change. For example, malware (viruses, spyware, computer worms, and so forth) evolves too quickly for anti-malware companies to manually update their databases. Likewise, digital personal assistants, such as Siri and Alexa, need to constantly adapt to unfamiliar requests from their owners.

To overcome these limitations, early AI researchers started to wonder whether computers could be programmed to learn new patterns. Their curiosity led to the birth of machine learning — the science of getting computers to do things they weren't specifically programmed to do.

Machine learning got its start very shortly after the first AI conference. In 1959, AI researcher Arthur Samuel described a program that could play checkers. This program was different: it was designed to play against itself so it could learn how to improve. It learned new strategies from each game it played, and after a short period of time it began to consistently beat its own programmer.

A key advantage of machine learning is that it doesn't require an expert to create symbolic patterns and list out all the possible responses to a question or statement. On its own, the machine creates and maintains the list, identifying patterns and adding them to its database.

Imagine machine learning applied to the Chinese room experiment. The computer would observe the passing of notes between itself and the person outside the room. After examining thousands of exchanges, the computer identifies patterns of communication and adds common words and phrases to its database. Now it can use that collection to interpret the notes it receives more quickly and to assemble a response from whole words and phrases instead of building it character by character. It may even create its own dictionary based on these matching patterns, so it has a complete, ready-made response to certain notes it receives.
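The learned dictionary described above can be sketched as a frequency table: the program counts which reply followed each note and keeps the most common one. This is a toy illustration that assumes notes arrive as whole strings and that exchanges are logged as (note, reply) pairs; the function name `learn_replies` and the sample exchanges are hypothetical.

```python
from collections import Counter, defaultdict


def learn_replies(exchanges):
    """Build a note -> most frequent reply table from observed (note, reply) pairs."""
    counts = defaultdict(Counter)
    for note, reply in exchanges:
        counts[note][reply] += 1
    # Keep only the reply seen most often for each note.
    return {note: replies.most_common(1)[0][0] for note, replies in counts.items()}


# Hypothetical log of notes passed through the slot and the replies that followed.
observed = [
    ("你好", "你好"),
    ("你好", "你好"),
    ("你好吗", "我很好"),
    ("你好", "您好"),
]
table = learn_replies(observed)
print(table["你好"])
print(table["你好吗"])
```

Note that nothing here involves understanding Chinese: the table only records which strings tended to follow which.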

Machine learning still qualifies as weak AI, because the computer doesn't understand what's being said; it only matches symbols and identifies patterns. The big difference is that instead of having an expert provide the patterns, the computer identifies patterns in the data. Over time, the computer becomes "smarter."

Machine learning has become one of the fastest-growing areas in AI, primarily because the cost of data storage and processing has dropped dramatically. We are currently in the era of data science and big data: extremely large data sets that computers can analyze to reveal patterns, trends, and associations. Organizations are collecting vast amounts of data, and the big challenge is figuring out what to do with it all. Machine learning answers that challenge, because it can identify patterns even when you don't know what you're looking for. In a sense, machine learning enables computers to find out what's inside your data and tell you what they found.

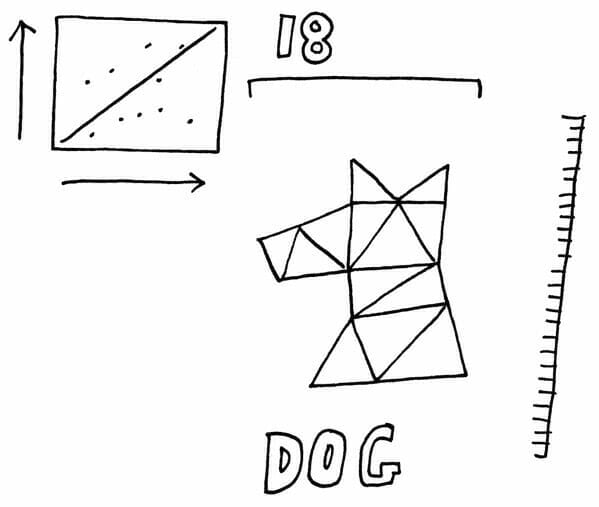

Machine learning moves past the limitations of symbolic systems. Instead of memorizing symbols, a computer system uses machine learning algorithms to create models of abstract concepts, detecting statistical patterns in massive amounts of data.

So a machine learning algorithm looks at the eight pictures of different dogs, breaks each picture down into individual dots, or pixels, and examines those pixels to detect patterns. Maybe it notices that all of these animals have hair. Maybe it finds a pattern for noses or ears. It could even detect a pattern that humans are unable to perceive. Collectively, the patterns create what might be considered a statistical expression of “dogness.”

Sometimes humans can help machines learn. We can feed the machine millions of pictures that we’ve already determined contain dogs, so the machine doesn’t have to worry about excluding images of cats, horses, or airplanes. This is called supervised learning, and the data, consisting of the label “dog” and the millions of pictures of dogs, is called a training set. Using the training set, a human being teaches the machine that all of the patterns it identifies are characteristics of “dog.”
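A minimal sketch of supervised learning, assuming each picture has already been reduced to a short list of pixel brightness values. It uses a nearest-centroid classifier, a simple technique chosen here for illustration (real image recognition systems use far more elaborate models): training averages the labeled examples into one pattern per label, and classification picks the label whose pattern is closest. The four-pixel images and the "cat" examples are invented so there is something to tell dogs apart from.

```python
def centroid(images):
    """Average a list of equal-length pixel vectors, pixel by pixel."""
    n = len(images)
    return [sum(pixels) / n for pixels in zip(*images)]


def train(training_set):
    """Compute one average pattern per label from (pixels, label) pairs."""
    by_label = {}
    for pixels, label in training_set:
        by_label.setdefault(label, []).append(pixels)
    return {label: centroid(images) for label, images in by_label.items()}


def classify(model, pixels):
    """Return the label whose average pattern is closest (squared distance)."""
    def distance(pattern):
        return sum((p - q) ** 2 for p, q in zip(pixels, pattern))
    return min(model, key=lambda label: distance(model[label]))


# Hypothetical 4-pixel "images" with brightness values from 0.0 to 1.0.
training_set = [
    ([0.9, 0.8, 0.1, 0.2], "dog"),
    ([0.8, 0.9, 0.2, 0.1], "dog"),
    ([0.1, 0.2, 0.9, 0.8], "cat"),
    ([0.2, 0.1, 0.8, 0.9], "cat"),
]
model = train(training_set)
print(classify(model, [0.85, 0.75, 0.15, 0.2]))  # dog
```

The averaged "dog" vector plays the role of the statistical expression of dogness: a new picture is labeled by how closely it matches that learned pattern.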

Machines can also learn completely on their own. We just feed massive amounts of data into the machine and let it find its own patterns. This is called unsupervised learning.

Imagine a machine examining all the pictures of people on your smart phone. It might not know whether someone is your husband, wife, boyfriend, or girlfriend. But it could group the people it sees into clusters and identify the ones who appear to be closest to you.
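Unsupervised learning can be sketched with k-means clustering, a common clustering algorithm used here purely as an illustration. The example assumes each photo has already been reduced to two numbers describing a face (real face-grouping features are far richer); no labels are provided, and the algorithm simply groups points that sit close together. The `kmeans` function and the sample points are hypothetical.

```python
def kmeans(points, k, iterations=10):
    """Group 2-D points into k clusters with plain k-means (no labels used)."""
    centers = [points[i] for i in range(k)]  # naive start: the first k points
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for x, y in points:
            # Assign each point to the nearest current center.
            nearest = min(
                range(k),
                key=lambda i: (x - centers[i][0]) ** 2 + (y - centers[i][1]) ** 2,
            )
            clusters[nearest].append((x, y))
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        centers = [
            (sum(p[0] for p in c) / len(c), sum(p[1] for p in c) / len(c)) if c else centers[i]
            for i, c in enumerate(clusters)
        ]
    return clusters


# Hypothetical two-number "face descriptors" for six photos.
points = [(1.0, 1.0), (8.0, 8.0), (1.2, 0.8), (0.9, 1.1), (8.1, 7.9), (7.8, 8.2)]
for cluster in kmeans(points, 2):
    print(cluster)
```

The algorithm never learns who anyone is; it only discovers that some photos resemble each other more than others.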

AI planning is a branch of artificial intelligence whose purpose is to identify strategies and action sequences that will, with a reasonable degree of confidence, enable the AI program to deliver the correct answer, solution, or outcome.

As I explained in a previous article, "Solve General Problems with Artificial Intelligence," one of the limitations of early AI, which was based on the physical symbol system hypothesis (PSSH), is combinatorial explosion: a mathematical phenomenon in which the number of possible combinations increases beyond the computer's capability to explore all of them in a reasonable amount of time.

AI planning attempts to solve the problem of combinatorial explosion by using something called heuristic reasoning — an approach that attempts to give artificial intelligence a form of common sense. Heuristic reasoning enables an AI program to rule out a large number of possible combinations by identifying them as impossible or highly unlikely. This approach is sometimes referred to as "limiting the search space."

A heuristic is a mental shortcut or rule of thumb that enables people to solve problems and make decisions quickly. For example, the Rule of 72 is a heuristic for estimating the number of years it takes an investment to double in value: you divide 72 by the annual rate of return, so an investment with a 6% rate of return would double your money in about 72/6 = 12 years.
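The heuristic can be compared with the exact answer, which for annual compounding is ln(2) / ln(1 + r). This short sketch (the function names are hypothetical) shows how close the shortcut stays: at 6% it gives 12 years, while the exact figure is about 11.9.

```python
import math


def rule_of_72(rate_percent):
    """Heuristic estimate of the years needed to double an investment."""
    return 72 / rate_percent


def exact_doubling_time(rate_percent):
    """Exact years to double with annual compounding: ln 2 / ln(1 + r)."""
    return math.log(2) / math.log(1 + rate_percent / 100)


for rate in (2, 6, 12):
    print(f"{rate}%: rule of 72 = {rule_of_72(rate):.1f} years, "
          f"exact = {exact_doubling_time(rate):.1f} years")
```

The heuristic trades a small loss of accuracy for an answer you can work out in your head, which is exactly the trade AI planning makes.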

Heuristic reasoning is common in innovation. Inventors rarely consider all the possibilities for solving a particular problem. Instead, they start with an idea, a hypothesis, or a hunch based on their knowledge and prior experience, then they start experimenting and exploring from that point forward. If they were to consider all the possibilities, they would waste considerable time, effort, energy, and expertise on futile experiments and research.

With AI planning, you might combine heuristic reasoning with a physical symbol system to improve performance. For example, imagine heuristic reasoning applied to the Chinese room experiment I introduced in my previous post on the general problem solver.

In the Chinese room scenario, you, an English-only speaker, are locked in a room with a narrow slot on the door through which notes can pass. You have a book filled with long lists of statements in Chinese, and the floor is covered in Chinese characters. You are instructed that upon receiving a certain sequence of Chinese characters, you are to look up a corresponding response in the book and, using the characters strewn about the floor, formulate your response.

What you do in the Chinese room is very similar to how AI programs work. They simply identify patterns, look up entries in a database that correspond to those patterns, and output the entries in response.

With the addition of heuristic reasoning, AI could limit the possibilities for the first note. For example, you could program the software to expect a message such as "Hello" or "How are you?" In effect, this would limit the search space, so the AI program would have to search only a limited number of records in its database to find an appropriate response. It wouldn't get bogged down searching the entire database to consider all possible messages and responses.

The only drawback is that if the first message were not one of those anticipated, the AI program would need to search its entire database.
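The heuristic and its drawback can be sketched together: check a small table of anticipated messages first, and fall back to a slow scan of the full database only when that fails. The `respond` function, the `expected` table, and the sample database are hypothetical; the second value returned reports which path was taken.

```python
def respond(note, expected, database):
    """Answer a note, checking a small set of anticipated messages first."""
    # Heuristic: the first note is probably a greeting, so try this small
    # table before anything else (the limited search space).
    if note in expected:
        return expected[note], "heuristic"
    # Fallback: scan the entire database, one record at a time.
    for pattern, reply in database:
        if pattern == note:
            return reply, "full scan"
    return None, "full scan"


expected = {"Hello": "Hello!", "How are you?": "Fine, thanks."}
database = [
    ("Good morning", "Good morning to you."),
    ("What time is it?", "I do not have a clock."),
]
print(respond("Hello", expected, database))
print(respond("What time is it?", expected, database))
```

When the guess is right, the answer comes from a two-entry table; when it is wrong, the program pays the full cost of searching everything.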

Heuristic reasoning is commonly employed in modern AI applications. For example, if you enter your location and destination in a GPS app, the app doesn't search its vast database of source data, which consists of satellite and aerial imagery; state, city, and county maps; the US Geological Survey; traffic data; and so on. Instead, it limits the search space to the area that encompasses the location and destination you entered. In addition, it limits the output to the fastest or shortest route (not both) depending on which setting is in force, and it likely omits a great deal of detail from its maps to further expedite the process.

The goal is to deliver an accurate map and directions, in a reasonable amount of time, that lead you from your current location to your desired destination as quickly as possible. Without the shortcuts to the process provided by heuristic reasoning, the resulting combinatorial explosion would leave you waiting for directions... possibly for the rest of your life.
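The bounding-box idea from the GPS example can be sketched in a few dozen lines. This is an assumption-laden toy, not how any particular navigation app works (production routing engines use far more elaborate techniques): it runs Dijkstra's shortest-path algorithm on a small hypothetical road graph but refuses to explore any intersection outside a box drawn around the start and the destination. The function name `shortest_route`, the `margin` parameter, and the sample graph are invented for illustration.

```python
import heapq


def shortest_route(graph, coords, start, goal, margin=1.0):
    """Dijkstra's shortest path, restricted to a box around start and goal.

    graph:  {node: [(neighbor, distance), ...]}
    coords: {node: (x, y)}, used only to build the bounding box.
    """
    (x1, y1), (x2, y2) = coords[start], coords[goal]
    lo_x, hi_x = min(x1, x2) - margin, max(x1, x2) + margin
    lo_y, hi_y = min(y1, y2) - margin, max(y1, y2) + margin

    def in_box(node):
        x, y = coords[node]
        return lo_x <= x <= hi_x and lo_y <= y <= hi_y

    best = {start: 0.0}
    previous = {}
    queue = [(0.0, start)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == goal:
            break
        if cost > best[node]:
            continue  # stale queue entry
        for neighbor, distance in graph.get(node, []):
            if not in_box(neighbor):
                continue  # heuristic: never explore outside the box
            new_cost = cost + distance
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                previous[neighbor] = node
                heapq.heappush(queue, (new_cost, neighbor))
    if goal not in best:
        return None, float("inf")
    path = [goal]
    while path[-1] != start:
        path.append(previous[path[-1]])
    return path[::-1], best[goal]


# Hypothetical road network; "Far" lies well outside the area of interest.
graph = {
    "A": [("B", 1.0), ("D", 1.5), ("Far", 3.0)],
    "B": [("A", 1.0), ("C", 1.0)],
    "C": [("B", 1.0), ("D", 1.5)],
    "D": [("A", 1.5), ("C", 1.5)],
    "Far": [("A", 3.0)],
}
coords = {"A": (0, 0), "B": (1, 0), "C": (2, 0), "D": (1, 1), "Far": (50, 50)}
print(shortest_route(graph, coords, "A", "C"))
```

The trade-off mirrors the Chinese room example: the box keeps the search small, but if the best route happened to leave the box, this version would never find it.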

Even though many of the modern AI applications are built on what are now considered old-fashioned methods, AI planning allows for the intelligent combination of these methods, along with newer methods, to build AI applications that deliver the desired output. The resulting applications can certainly make computers appear to be intelligent beings — providing real-time guidance from point A to point B, analyzing contracts, automating logistics, and even building better video games.

If you're considering a new AI project, don't be quick to dismiss the benefits of good old-fashioned AI (GOFAI). Newer approaches may not be the right fit.

In one of my previous posts, "The General Problem Solver," I discuss the debate over whether a physical symbol system is necessary and sufficient for intelligence. The developers of one of the early AI programs were convinced that it is, but philosopher John Searle presented his Chinese room argument as a rebuttal to this theory. Searle concluded that a machine's ability to store and match symbols (patterns) to find the answers to questions or to solve problems does not constitute intelligence. Instead, intelligence requires an understanding of the question or problem, which a machine does not have.

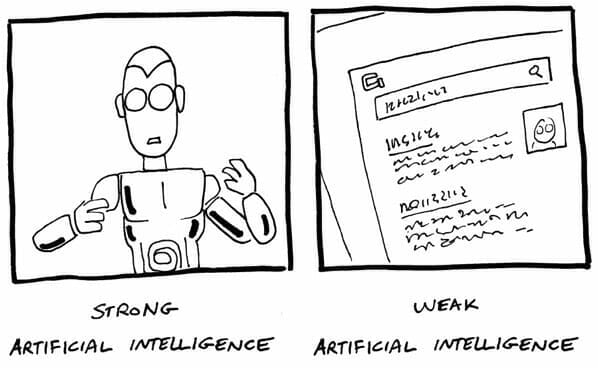

Searle went on to distinguish between two types of artificial "intelligence" — weak AI and strong AI:

● Weak AI is confined to a very narrow task, such as Netflix recommending movies or TV shows based on a customer's viewing history, finding a website that is likely to contain the content a user is looking for, or analyzing loan applications and determining whether a candidate should be approved or rejected. A weak AI program does not understand questions, experience emotions, care about anything or anyone, or learn for the sake of learning. It merely performs whatever task it was designed to do.

● Strong AI exhibits human characteristics, such as emotions, understanding, wisdom, and creativity. Examples of strong AI come from science fiction and include HAL (the Heuristically programmed ALgorithmic computer) that controls the spaceship in 2001: A Space Odyssey, Lieutenant Commander Data from Star Trek, and C-3PO and R2-D2 from Star Wars. These mechanical, computerized beings have emotions, self-awareness, and even a sense of humor. They are machines capable of learning new tasks, not limited to the tasks they have been programmed to perform.

Just Getting Started

Despite what science fiction writers would have us believe, and despite the dark warnings in the media about intelligent robots eventually taking over the world and enslaving or eradicating the human race, most experts believe that we’re just getting rolling with weak AI. Currently, AI and machine learning systems are being used to answer fact-based questions, recommend products, improve online security, prevent fraud, and perform a host of other specific tasks that rely on processing lots of data and identifying patterns in that data. Strong AI remains relegated to the world of science fiction.

Weak AI is more of an illusion of intelligence. Developers combine natural language processing (NLP) with pattern matching to enable machines to carry out spoken commands. The experience is not much different from using a search engine such as Google or Bing to answer questions or asking Netflix for movie recommendations. The only difference is that the latest generation of virtual assistants, including Apple's Siri, Microsoft's Cortana, and Amazon's Alexa, respond to spoken language and are able to talk back. They can even perform some basic tasks, such as placing a call, ordering a product online, or booking a reservation at your favorite restaurant.

In addition, the latest virtual assistants have the ability to learn. If they misinterpret what you say (or type), and you correct them, they learn from the mistake and are less likely to make the same mistake in the future. If you use a virtual assistant, you will notice that, over time, they become much more in tune with the way you express yourself. If someone else joins the conversation, the virtual assistant is much more likely to struggle to interpret what that person says.

This ability to respond to voice commands and adapt to different people's vocabulary and pronunciation may appear to be a sign of intelligent life, but it is all smoke and mirrors — valuable, but still weak AI.

Weak AI also includes expert systems: software programs that use databases of specialized knowledge along with processes, such as decision trees, to offer advice or make decisions in areas such as loan approvals, medical diagnosis, and choosing investments. In the U.S. alone, about 70 percent of overall trading volume is driven by algorithms and bots (short for robots). Expert systems are also commonly used to evaluate and approve or reject loan applications. Courts are also discussing the possibility of replacing judges with expert systems for more consistency (less bias) in rendering verdicts and punishments.

To create expert systems, software developers team up with experts in a given field to determine the data the experts need to perform specific tasks, such as choosing a stock, reviewing a loan application, or diagnosing an illness. The developers also work with the experts to figure out what steps the experts take in performing those tasks and the criteria they consider when making a decision or formulating an opinion. The developers then create a program that replicates, as closely as possible, what the expert does.
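The result of that collaboration is often a set of explicit if-then rules. The sketch below encodes a hypothetical loan-review decision tree; the function name `review_loan` and every threshold in it are illustrative placeholders invented for this example, not real lending criteria. Returning a reason alongside the decision reflects a common expert-system trait: the system can explain which rule fired.

```python
def review_loan(credit_score, debt_to_income, years_employed):
    """Apply hypothetical expert rules, checked in order like a decision tree.

    All thresholds are illustrative placeholders, not real lending criteria.
    """
    if credit_score < 600:
        return "reject", "credit score below minimum"
    if debt_to_income > 0.40:
        return "reject", "debt-to-income ratio too high"
    if credit_score >= 700 or years_employed >= 2:
        return "approve", "meets credit or employment criteria"
    return "refer", "borderline case: send to a human underwriter"


print(review_loan(720, 0.30, 1))
print(review_loan(650, 0.35, 1))
```

The "refer" branch illustrates a common design choice: when the encoded expertise runs out, the system hands the case back to a person rather than guessing.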

Developers may even program learning into an expert system, so that the system can improve over time as more data is collected and analyzed and as the system is corrected when it makes mistakes.

Although weak AI is still fairly powerful, it does have limitations, particularly in the following areas:

● Common sense: AI responds based on the available data, just the facts as presented to it. It doesn't question the data, so if it has bad data, it may make bad decisions or offer bad advice. In the stock market, for example, bad data could trigger an unjustified selloff, and because the bots can execute trades so quickly, that could lead to considerable financial losses for investors.

● Emotion: Computers cannot offer the emotional understanding and support that people often need, although they can fake it to some degree with emotional language.

● Wisdom: While computers can store and recall facts, they lack the ability to discern less quantifiable qualities, such as character, commitment, and credibility — an ability that may be required in some applications.

● Creativity: Computers cannot match humans when it comes to innovation and may never be able to do so. While computers can plod forward to solve problems, they do not make the mental leaps that humans are capable of making. In exploring all the possibilities to solve a problem, for example, computers are susceptible to combinatorial explosions — a mathematical phenomenon in which the number of possible combinations increases beyond the computer's capability to explore all of them in a reasonable amount of time.

Given its limitations, weak AI is unlikely to ever replace human beings in certain roles and functions. A more likely scenario is what we are now seeing — people teaming up with AI systems to perform their jobs better and faster. While humans provide the creativity, emotion, common sense, and wisdom that machines lack, machines provide the speed, accuracy, thoroughness, and objectivity often lacking in humans.

In a previous post entitled "Playing the Imitation Game," I discussed Alan Turing's vision, published in 1936, of a single, universal machine that could be programmed to solve any particular problem. In 1959, Allen Newell and Herbert A. Simon took a different approach. Their goal was to develop a computer program that could function as a universal problem solver.

Newell and Simon - Courtesy Carnegie Mellon University Libraries

In theory, their general problem solver (GPS) would be able to solve any problem that could be presented in the form of specific types of mathematical formulas that are useful in programming logic. This type of problem would include geometric proofs, which start with definitions, axioms (statements accepted as fact), postulates, and previously proven theorems, and use logic to arrive at reasoned conclusions.

One of the problems GPS solved was the Tower of Hanoi — a game or puzzle consisting of three rods and a number of disks of different sizes, which can slide onto any rod.

When you start, all the disks are on one rod, ordered from largest to smallest from the bottom up. The goal is to move the entire stack to another rod in the fewest possible moves, following these rules:

● Move only one disk at a time.

● Do not place a larger disk on top of a smaller one.

● Each move consists of taking the top disk from one stack and placing it on an empty rod or on the top of an existing stack.

The minimum number of moves to solve the Tower of Hanoi is $2^n - 1$, where $n$ is the number of disks, so for three disks, the minimum number of moves is $2^3 - 1 = (2 \times 2 \times 2) - 1 = 7$.
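The move count can be checked with the classic recursive solution. This is not how GPS attacked the puzzle (GPS relied on means-ends analysis); it is a standard textbook recursion shown here only to confirm the $2^n - 1$ figure and to replay the rules listed above. The rod names A, B, and C and the function names are arbitrary choices for this sketch.

```python
def hanoi(n, source, target, spare, moves):
    """Append the moves that transfer n disks from source to target."""
    if n == 0:
        return
    hanoi(n - 1, source, spare, target, moves)  # move the smaller disks out of the way
    moves.append((source, target))              # move the largest remaining disk
    hanoi(n - 1, spare, target, source, moves)  # restack the smaller disks on top of it


def check(n, moves):
    """Replay the moves and confirm the puzzle's rules are respected."""
    rods = {"A": list(range(n, 0, -1)), "B": [], "C": []}  # bottom of each list = bottom of rod
    for source, target in moves:
        disk = rods[source].pop()  # only the top disk may move
        if rods[target] and rods[target][-1] < disk:
            return False  # a larger disk was placed on a smaller one
        rods[target].append(disk)
    return rods["C"] == list(range(n, 0, -1))


for n in range(1, 6):
    moves = []
    hanoi(n, "A", "C", "B", moves)
    print(n, "disks:", len(moves), "moves, valid =", check(n, moves))
```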

One of the key parts of the general problem solver was what Newell and Simon called the physical symbol system hypothesis (PSSH). According to Newell and Simon, "A physical symbol system has the necessary and sufficient means for general intelligent action." Such a system would be able to take patterns (symbols), combine them into structures (expressions), and manipulate them using various processes to produce new expressions.

Newell and Simon believed that human intelligence was no more than a complex physical symbol system. They thought that a key part of human reasoning consisted merely of connecting symbols — that our language, ideas, and concepts were just broad groupings of interconnected symbols. For example, when we see a chair or a picture of a chair, we associate it with the act of sitting. When we smell smoke, we associate it with fire, which is associated with danger, which may trigger a fight-or-flight response.
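The chain of associations above (smoke leads to fire, fire to danger, danger to a fight-or-flight response) can be sketched as forward chaining: apply "if X then Y" rules over and over until no new symbols appear. Forward chaining is a standard rule-based technique used here only as an illustration; it is not a reconstruction of Newell and Simon's program, and the rule list and `forward_chain` name are hypothetical.

```python
def forward_chain(facts, rules):
    """Apply (premise, conclusion) rules until nothing new can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            if premise in known and conclusion not in known:
                known.add(conclusion)  # connect a new symbol to what we know
                changed = True
    return known


rules = [
    ("smoke", "fire"),
    ("fire", "danger"),
    ("danger", "fight-or-flight"),
    ("chair", "sitting"),
]
print(sorted(forward_chain({"smoke"}, rules)))
```

The program reaches "fight-or-flight" purely by linking symbols; whether that amounts to thinking is exactly the question Searle raises next.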

Newell and Simon argued that by feeding a machine enough physical symbols, creating a sufficient number of associations, and putting rules in place for combining symbols into structures and manipulating them to create new expressions, machines could be made to "think" like we humans do. This theory forms the basis of what drives most of machine learning and artificial intelligence to this day.

Not everyone buys into the notion that a physical symbol system is necessary and sufficient for human intelligence. In 1980, philosopher John Searle argued that merely connecting symbols could not be considered intelligence. To support his argument against the idea that manipulating physical symbols constituted intelligence, he presented what is commonly referred to as the Chinese room argument.

Imagine yourself, an English-only speaker, locked in a room with a narrow slot on the door through which you can pass notes. You have a book filled with long lists of statements in Chinese, and the floor is covered in Chinese characters. You are instructed that upon receiving a certain sequence of Chinese characters, you are to look up a corresponding response in the book and, using the characters strewn about the floor, formulate your response.

Someone outside the room who speaks and writes fluent Chinese writes a note on a sheet of paper and passes it to you through the slot on the door. Following the instructions you were given, you look up a response in the book, copy the response using characters from the floor to create your note, and pass it through the slot to the person who delivered the original message.

The native speaker may believe that the two of you are communicating and that you know the language. However, Searle argues that this is no proof of intelligence, because you have no understanding of the messages you are receiving or sending.

You can try a similar experiment with your smart phone. If you ask Siri or Alexa how she's feeling, she will answer your question even though she feels nothing at all. She doesn't even understand the question. This artificially "intelligent" being is merely matching your question to what is considered an acceptable answer and delivering that answer to you.

A huge obstacle to achieving artificial intelligence through a physical symbol system is what's known as combinatorial explosion: the rapid growth of symbol combinations that makes pattern-matching increasingly difficult. Combinatorial explosion is far greater than exponential growth. The formula for exponential growth can be expressed as $y = 2^x$, whereas the formula for combinatorial explosion is $y = x!$ (the factorial of $x$). For example, if $x = 20$, then

exponential growth: $y = 2^x = 2^{20} = \underbrace{2 \times 2 \times \cdots \times 2}_{20 \text{ factors}} = 1{,}048{,}576$

combinatorial explosion: $y = x! = 20! = 1 \times 2 \times 3 \times \cdots \times 19 \times 20 = 2{,}432{,}902{,}008{,}176{,}640{,}000$

With each added symbol, the number of combinations increases dramatically. Considering all the possible combinations would require immense computational resources over a considerable amount of time.
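A quick way to see the gap is to compute both quantities side by side. This sketch (the `growth` helper is a hypothetical name) uses Python's exact integer arithmetic, so the values for $x = 20$ match the figures above.

```python
import math


def growth(x):
    """Return (2**x, x!) so exponential and factorial growth can be compared."""
    return 2 ** x, math.factorial(x)


for x in (5, 10, 15, 20):
    exp_count, fact_count = growth(x)
    print(f"x = {x:2d}: 2^x = {exp_count:>9,}   x! = {fact_count:>25,}")
```

Each step up in $x$ multiplies the exponential count by 2 but multiplies the factorial count by $x$ itself, which is why the factorial pulls away so quickly.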

Even with these challenges, pattern-matching has remained the cornerstone of artificial intelligence, regardless of whether it is even in the same ballpark as human intelligence.