So what are neural networks? An artificial neural network (often referred to simply as a neural network) is a computer system modeled after the structure of a biological brain that facilitates machine learning.

The human brain is composed of about 100 billion neurons that communicate with one another electrochemically across minute gaps called synapses. A single neuron can have up to 10,000 connections with other neurons. Working together, neurons are responsible for receiving sensory input from the external world, regulating bodily functions, controlling muscle movement, forming and recording memories and thoughts, and more.

Neurons increase the strength of their connections based on learning and practice. Whether you're studying a new language, learning to play a musical instrument, or training for the World Cup, your neurons strengthen existing connections and create new connections for developing the requisite knowledge and skills. That's why the more you practice the better you get; selected neurons build new and more efficient paths between and among one another. Eventually, with enough study and practice, you perform certain tasks with little to no conscious effort.

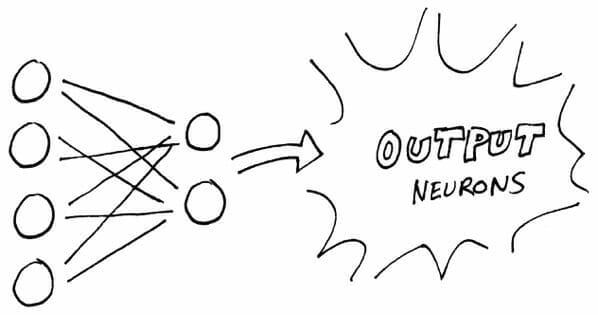

Instead of being made up of neurons, an artificial neural network consists of nodes. Each node receives input from one or more other nodes or from an external source and computes an output. A node's output is then sent to one or more other nodes in the neural network or is communicated to the outside world. This communication might be as the answer to a question or as the solution to a problem.

Nodes are arranged in layers: an input layer, hidden layers, and an output layer. Data (such as a spoken word or phrase, an image, or a question) enters the input layer, is processed in the hidden layers, and the result is delivered via the output layer.

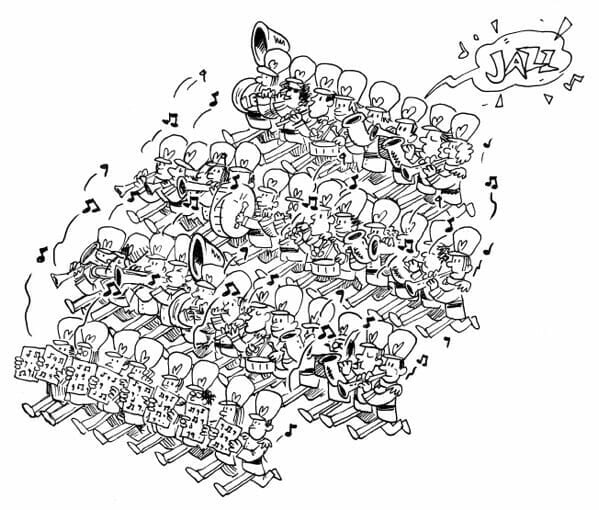

Picture nodes in a neural network as players in a marching band and each row of band members as a layer. Assume that none of the players knows the music to be played or how to move during the performance. Only the front row of band members can see the band leader (the drum major). The drum major gives the first row a signal that's passed through the remaining rows (layers), enabling all players to coordinate their movements and the playing of their instruments.

At first, players would be bumping into one another and playing the wrong notes, but with more and more practice, the players would get in sync and perform as a unit. They would learn.

To smooth the learning curve, the band creates a system that enables band members to provide feedback. As they move and play, the band members choose numbers that indicate their level of confidence (say from 0 to 100 percent) that they are doing it right. Based on each band member's confidence level, neighboring band members make small adjustments and then check to see whether their adjustments increased or decreased their neighbor's confidence level. The goal is to achieve a 100 percent confidence level for all band members.

The idea here is that this neural marching band network will learn on its own without additional input or correction from an outside source. Theoretically, at least, the nodes will eventually make enough small adjustments to produce the correct output (a stellar performance) through trial and error, learning from their mistakes.

As you can imagine, learning by trial and error can be very chaotic and time-consuming, especially when you have multiple entities making their own adjustments based on input from numerous other entities. In the case of our fictional marching band, band members would be bumping into one another and playing the wrong notes for hours, days, or weeks before they actually coordinated their efforts.

To overcome this challenge, AI developers attempt to tweak the network to make it more efficient. For example, suppose you gave more weight to feedback from the drummers because they set the rhythm. Perhaps you give their confidence level four times the importance as other band members. Now, when the band members make adjustments, they look more to the drummers to determine the net impact of the adjustments they made, and the marching band learns much faster.

Eventually, the band delivers a nicely choreographed and well-orchestrated performance to the output layer. If this were a neural network, the output could then be stored, and whenever instructed to do so, it could repeat its performance. In addition, the strengthened connections between certain neurons might make learning new musical arrangements easier.

In one of my previous posts Solve General Problems with Artificial Intelligence, I discussed an approach to artificial intelligence (AI) referred to as the physical symbol system hypothesis (PSSH). The theory behind this approach is that human intelligence consists of the ability to take symbols (recognizable patterns), combine them into structures (expressions), and manipulate them using various processes to produce new expressions.

As philosopher John Searle pointed out with his Chinese room argument, this ability, in and of itself, does not constitute intelligence, because it requires no understanding of the symbols or expressions. For example, if you ask your virtual assistant (Siri, Alexa, Bixby, Cortana, etc.) a question, it searches through a list of possible responses, chooses one, and provides that as the answer. It doesn't understand the question and has no desire to answer the question or to provide the correct answer.

AI built on the PSSH is proficient at storing lots of data (memorization) and pattern-matching, but it is not so good at learning. Learning requires the ability not only to memorize but also to generalize and specify.

Human evolution has made us experts at memorization, generalization, and specification. To a large degree, it is how we learn. We record (memorize) details about our environment, experiences, thoughts, and feelings; form generalizations that enable us to respond appropriately to similar environments and experiences in the future; and then fine-tune our impressions through specification. For example, if you try to pet a dog, and it snaps at you, you may generalize to avoid dogs in the future. However, over time, you develop more nuanced thoughts about dogs—that not all dogs snap when you try to pet them and that there are certain ways to approach dogs that make them less likely to snap at you.

The combination of memorization, generalization, and specification is a valuable survival skill. It also plays a key role in enabling machine learning — providing machines with the ability to recognize unfamiliar patterns based on what they already "know" about familiar patterns.

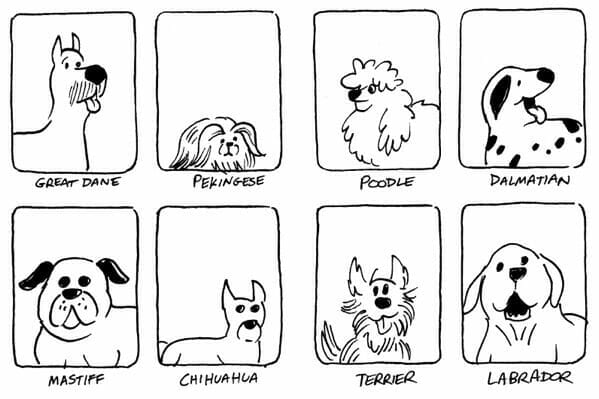

Look at the following eight images. You can tell that the eight images represent different breeds of dogs, even though these aren't photographs of actual dogs.

You know that these are all pictures of dogs, because you have encountered many dogs in your life (in person, in photos and drawings, and in videos), and you have formed in your mind an abstract idea of what a dog looks like.

In their early stages of learning, children often overgeneralize. For example, upon learning the word "dog," they call all furry creatures with four legs "dog." To these children, cats are dogs, cows are dogs, sheep are dogs, and so on. This is where specification comes into play. As children encounter different species of four-legged mammals, they begin to identify the qualities that make them distinct.

Computers are far better at memorization and far worse at generalization and specification. The computer could easily memorize the eight images of dogs, but if you fed the computer a ninth image of a dog, as shown below, it would likely struggle to match it to one of the existing images and identify it as an image of a dog. In other words, the computer would quickly master memorization and pattern-matching but struggle to learn due to its inability to generalize and specify.

For this same reason, language translation programs have always struggled with accuracy. Developers have created physical symbol systems that translate words and phrases from one language to another, but these programs never really learn the language. Instead, they function merely as an old-fashioned foreign language dictionary and phrasebook — quickly looking up words and phrases in the source language to find the matching word or phrase in the destination language and then stitching together the words and phrases to provide the translation. These translation programs often fail when they encounter unfamiliar words, phrases, and even syntax (word order).

Currently, machines have the ability to learn. The big challenge in the future of AI learning and machine learning will be to enable machines to do a better job of generalizing and specifying. Instead of merely matching memorized symbols, newer machines will create abstract models based on the patterns they observe (the patterns they are fed). These models will have the potential to help these machines learn more effectively, so they can more accurately interpret unfamiliar future input.

As machines become better at generalizing and specializing, they will achieve greater levels of intelligence. It remains to be seen, however, whether machines will ever have the capacity to develop self-awareness and self-determination — key characteristics of human intelligence.