Fueling the rise of machine learning and deep learning is the availability of massive amounts of data, often referred to as big data. If you wanted to create an AI program to identify pictures of cats, you could access millions of cat images online. The same is true, often even more so, of other types of data. Various organizations have access to vast amounts of data, including charge card transactions, user behaviors on websites, data from online games, published medical studies, satellite images, online maps, census reports, voter records, economic data and machine-generated data (from machines equipped with sensors that report the status of their operation and any problems they detect). So what is the relationship between AI and big data?

This treasure trove of data has given machine learning a huge advantage over symbolic systems. Having a neural network chew on gigabytes of data and report on it is much easier and quicker than having an expert identify and input patterns and reasoning schemas to enable the computer to deliver accurate responses.

In some ways the evolution of machine learning is similar to how online search engines evolved. Early on, users would consult website directories such as Yahoo! to find what they were looking for — directories that were created and maintained by humans. Website owners would submit their sites to Yahoo! and suggest the categories in which to place them. Yahoo! personnel would then vet the sites and add them to the directory or deny the request. The process was time-consuming and labor-intensive, but it worked well when the web had relatively few websites. When the thousands of websites proliferated into millions and then crossed the one billion threshold, the system broke down fairly quickly. Human beings couldn’t work quickly enough to keep the Yahoo! directories current.

In 2000, Yahoo! partnered with a smaller company called Google that had developed a search engine to locate and categorize web pages. Google’s first search engine examined backlinks (pages that linked to a given page) to determine the relevance and authority of the given page and rank it accordingly in its search results. Since then, Google has developed additional algorithms to determine a page’s rank (or relevance); for example, the more users who enter the same search phrase and click the same link, the higher the ranking that page receives. This approach is similar to the way neurons in an artificial neural network strengthen their connections.
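To make the backlink idea concrete, here is a minimal sketch in Python. It simply counts how many distinct pages link to each page and sorts by that count. The link graph and the `rank_by_backlinks` function are invented for illustration; Google's actual ranking weighs links far more carefully than a raw count, so treat this as a toy model only.

```python
from collections import Counter

# Hypothetical link graph: each page maps to the pages it links to.
links = {
    "a.example": ["c.example", "b.example"],
    "b.example": ["c.example"],
    "c.example": ["a.example"],
    "d.example": ["c.example", "a.example"],
}

def rank_by_backlinks(link_graph):
    """Return (page, backlink_count) pairs, most-linked page first."""
    counts = Counter()
    for source, targets in link_graph.items():
        # Count each linking page once, and ignore links a page makes to itself.
        for target in set(targets):
            if target != source:
                counts[target] += 1
    # Pages that nobody links to still belong in the ranking, with a count of 0.
    for page in link_graph:
        counts.setdefault(page, 0)
    # Sort by count (descending), then by name so ties are stable.
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))

ranking = rank_by_backlinks(links)
for page, backlinks in ranking:
    print(page, backlinks)
```

In this made-up graph, three pages link to `c.example`, so it lands at the top of the list, while `d.example`, which no page links to, lands at the bottom.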

The fact that Google is one of the companies most enthusiastic about AI is no coincidence. The entire business has been built on using machines to interpret massive amounts of data. Rosenblatt's perceptrons could look through only a couple of grainy images. Now we have processors that are at least a million times faster sorting through massive amounts of data to find content that’s most likely to be relevant to whatever a user searches for.

Deep learning architecture adds even more power, enabling machines to identify patterns in data that just a few decades ago would have been nearly imperceptible. With more layers, a neural network can perceive details that would go unnoticed by most humans. These deep learning networks look at so much data and create so many new connections that it’s not even clear how these programs discover the patterns.

A deep learning neural network is like a black box swirling together computation and data to determine what it means to be a cat. No human knows how the network arrives at its decision. Is it the whiskers? Is it the ears? Or is it something about all cats that we humans are unable to see? In a sense, the deep learning network creates its own model for what it means to be a cat, a model that as of right now humans can only copy or read, but not understand or interpret.

In 2012, the Google Brain project did just that. Developers fed 10 million random images from YouTube videos into a network that had over 1 billion neural connections running on 16,000 processor cores. They didn’t label any of the data, so the network didn’t know what it meant to be a cat, a human, or a car. Instead, the network just looked through the images and came up with its own clusters. It found that many of the videos contained a very similar cluster. To the network, this cluster looked like this.

A “cat” from “Building high-level features using large scale unsupervised learning”

Now as a human you might recognize this as the face of a cat. To the neural network this was just a very common something that it saw in many of the videos. In a sense it invented its own interpretation of a cat. A human might go through and tell the network that this is a cat, but this isn’t necessary for the network to find cats in these videos. In fact the network was able to identify a “cat” 74.8% of the time. In a nod to Alan Turing, the Cato Institute’s Julian Sanchez called this the “Purring Test.”
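The idea of a program forming its own clusters from unlabeled data can be sketched on a tiny scale. The example below uses k-means, a much simpler technique than the deep network in the study and not the method the researchers used; it is swapped in here only to show grouping without labels. The points, the `kmeans` function, and the choice of two clusters are all assumptions made for illustration.

```python
def squared_distance(p, q):
    """Squared straight-line distance between two 2-D points."""
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2

def kmeans(points, k, iterations=10):
    """Group unlabeled 2-D points into k clusters using plain k-means."""
    # Naive, deterministic start: use the first k points as the initial centers.
    centers = [points[i] for i in range(k)]
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            # Put each point in the cluster whose center is nearest.
            nearest = min(range(k), key=lambda i: squared_distance(p, centers[i]))
            clusters[nearest].append(p)
        # Move each center to the average of its members (keep it if empty).
        centers = [
            (sum(x for x, _ in c) / len(c), sum(y for _, y in c) / len(c))
            if c else centers[i]
            for i, c in enumerate(clusters)
        ]
    return centers, clusters

# Hypothetical unlabeled data: nobody tells the program which group is which.
points = [(1.0, 1.0), (9.0, 9.0), (1.2, 0.8), (0.8, 1.1), (9.1, 8.7), (8.8, 9.3)]
centers, clusters = kmeans(points, k=2)
for center, members in zip(centers, clusters):
    print(center, members)
```

The program never learns a name for either group. It only notices that some points sit close together, which is roughly the position the network was in when it kept running into the same cat-like pattern.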

If you decide to start working with AI, accept the fact that your network might be sensing things that humans are unable to perceive. Artificial intelligence is not the same as human intelligence, and even though we may reach the same conclusions, we’re definitely not going through the same process.

In one of my previous posts, "The General Problem Solver," I discuss the debate over whether a physical symbol system is necessary and sufficient for intelligence. The developers of one of the early AI programs were convinced it was, but philosopher John Searle presented his Chinese room argument as a rebuttal to this theory. Searle concluded that a machine's ability to store and match symbols (patterns) to find the answers to questions or to solve problems does not constitute intelligence. Instead, intelligence requires an understanding of the question or problem, which a machine does not have.

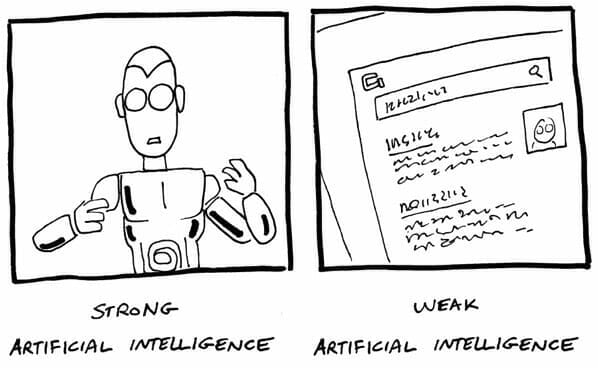

Searle went on to distinguish between two types of artificial "intelligence" — weak AI and strong AI:

● Weak AI is confined to a very narrow task, such as Netflix recommending movies or TV shows based on a customer's viewing history, finding a website that is likely to contain the content a user is looking for, or analyzing loan applications and determining whether a candidate should be approved or rejected. A weak AI program does not understand questions, experience emotions, care about anything or anyone, or learn for the sake of learning. It merely performs whatever task it was designed to do.

● Strong AI exhibits human characteristics, such as emotions, understanding, wisdom, and creativity. Examples of strong AI come from science fiction: HAL (the Heuristically programmed ALgorithmic computer) that controls the spaceship in 2001: A Space Odyssey, Lieutenant Commander Data from Star Trek, and C-3PO and R2-D2 from Star Wars. These mechanical, computerized beings have emotions, self-awareness, and even a sense of humor. They are machines capable of learning new tasks and are not limited to the tasks they have been programmed to perform.

Just Getting Started

Despite what science fiction writers want us to believe, and despite the dark warnings in the media about intelligent robots eventually taking over the world and enslaving or eradicating the human race, most experts believe that we’re just getting started with weak AI. Currently, AI and machine learning systems are being used to answer fact-based questions, recommend products, improve online security, prevent fraud, and perform a host of other specific tasks that rely on processing lots of data and identifying patterns in that data. Strong AI remains relegated to the world of science fiction.

Weak AI is more of an illusion of intelligence. Developers combine natural language processing (NLP) with pattern matching to enable machines to carry out spoken commands. The experience is not much different from using a search engine such as Google or Bing to answer questions or asking Netflix for movie recommendations. The only difference is that the latest generation of virtual assistants, including Apple's Siri, Microsoft's Cortana, and Amazon's Alexa, respond to spoken language and are able to talk back. They can even perform some basic tasks, such as placing a call, ordering a product online, or booking a reservation at your favorite restaurant.

In addition, the latest virtual assistants have the ability to learn. If they misinterpret what you say (or type), and you correct them, they learn from the mistake and are less likely to make the same mistake in the future. If you use a virtual assistant, you will notice that, over time, it becomes much more in tune with the way you express yourself. If someone else joins the conversation, the virtual assistant is much more likely to struggle to interpret what that person says.

This ability to respond to voice commands and adapt to different people's vocabulary and pronunciation may appear to be a sign of intelligent life, but it is all smoke and mirrors — valuable, but still weak AI.

Weak AI also includes expert systems: software programs that use databases of specialized knowledge along with processes, such as decision trees, to offer advice or make decisions in areas such as loan approvals, medical diagnosis, and choosing investments. In the U.S. alone, an estimated 70 percent of overall trading volume is driven by algorithms and bots (short for robots). Expert systems are also commonly used to evaluate loan applications and approve or reject them. Courts are even discussing the possibility of replacing judges with expert systems for more consistency (less bias) in rendering verdicts and punishments.

To create expert systems, software developers team up with experts in a given field to determine the data the experts need to perform specific tasks, such as choosing a stock, reviewing a loan application, or diagnosing an illness. The developers also work with the experts to figure out what steps the experts take in performing those tasks and the criteria they consider when making a decision or formulating an opinion. The developers then create a program that replicates, as closely as possible, what the expert does.

Developers may even program learning into an expert system, so that the system can improve over time as more data is collected and analyzed and as the system is corrected when it makes mistakes.
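As a rough illustration of how an expert's decision steps can be turned into a program, here is a minimal sketch of a rule-based loan reviewer in Python. The `Application` fields, the `review_loan` function, and every threshold in it are hypothetical; a real system would encode the criteria that actual lending experts supply.

```python
from dataclasses import dataclass

@dataclass
class Application:
    credit_score: int
    annual_income: float
    requested_amount: float

def review_loan(app: Application) -> str:
    """Walk a small decision tree and return a decision.

    All thresholds are made up for illustration.
    """
    # Rule 1: a very low credit score is rejected outright.
    if app.credit_score < 600:
        return "reject"
    # Rule 2: a loan larger than half the applicant's yearly income
    # is passed to a person instead of being decided automatically.
    if app.requested_amount > 0.5 * app.annual_income:
        return "refer to human reviewer"
    # Otherwise the application passes every rule.
    return "approve"

print(review_loan(Application(credit_score=550, annual_income=80_000, requested_amount=10_000)))
print(review_loan(Application(credit_score=700, annual_income=40_000, requested_amount=30_000)))
print(review_loan(Application(credit_score=700, annual_income=80_000, requested_amount=10_000)))
```

Notice that the program applies its rules exactly as written. It has no idea what a loan is or why the thresholds matter, which is what makes it weak AI.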

Although weak AI is still fairly powerful, it does have limitations, particularly in the following areas:

● Common sense: AI responds based on the available data, just the facts, as presented to it. It doesn't question the data, so if it has bad data, it may make bad decisions or offer bad advice. In the stock market, for example, bad data could trigger an unjustified selloff and, because the bots can execute trades so quickly, that could lead to considerable financial losses for investors.

● Emotion: Computers cannot offer the emotional understanding and support that people often need, although they can fake it to some degree with emotional language.

● Wisdom: While computers can store and recall facts, they lack the ability to discern less quantifiable qualities, such as character, commitment, and credibility — an ability that may be required in some applications.

● Creativity: Computers cannot match humans when it comes to innovation and may never be able to do so. While computers can plod forward to solve problems, they do not make the mental leaps that humans are capable of making. In exploring all the possibilities to solve a problem, for example, computers are susceptible to combinatorial explosions — a mathematical phenomenon in which the number of possible combinations increases beyond the computer's capability to explore all of them in a reasonable amount of time.
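The combinatorial explosion mentioned under creativity is easy to see with a little arithmetic. The sketch below counts the number of different orders in which a delivery driver could visit a set of stops, which grows as a factorial. The delivery scenario and the `route_count` function are assumptions chosen for illustration.

```python
import math

def route_count(stops: int) -> int:
    """Number of distinct orders in which `stops` locations can be visited."""
    return math.factorial(stops)

for stops in (5, 10, 20):
    print(stops, "stops:", route_count(stops), "possible routes")
```

Five stops allow 120 routes and ten stops allow 3,628,800, but twenty stops allow roughly 2.4 quintillion. A program that checks every route one by one falls hopelessly behind long before the problem looks large to a human.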

Given its limitations, weak AI is unlikely to ever replace human beings in certain roles and functions. A more likely scenario is what we are now seeing — people teaming up with AI systems to perform their jobs better and faster. While humans provide the creativity, emotion, common sense, and wisdom that machines lack, machines provide the speed, accuracy, thoroughness, and objectivity often lacking in humans.