Prior to starting an AI project, the first choice you need to make is whether to use an expert system (a rule-based system) or machine learning. The choice comes down to the amount of data, the variation in that data, and whether you have a clear set of steps for extracting a solution from that data. An expert system is best when you have a sequential problem and a finite number of steps leads to a solution. Machine learning is best when you want to move beyond memorizing sequential steps and need to analyze large volumes of data to make predictions or to identify patterns that you may not even know would provide insight; that is, when your problem contains a certain level of uncertainty.

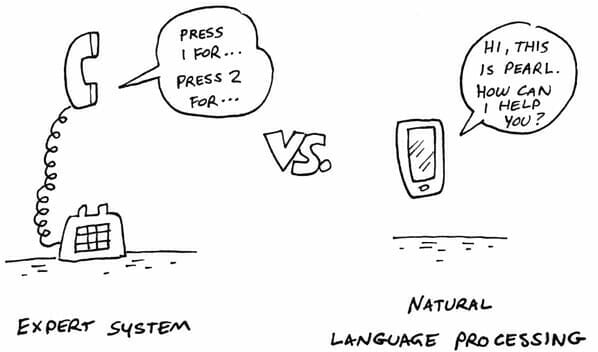

Think about it in terms of an automated phone system.

Older phone systems are sort of like expert systems; a message tells the caller to press 1 for sales, 2 for customer service, 3 for technical support and 4 to speak to an operator. The system then routes the call to the proper department based on the number that the caller presses.
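As a minimal sketch of that kind of rule-based routing, the menu can be written as a fixed lookup table. The function name `route_keypress` and the choice to send unrecognized keys to the operator are assumptions made for illustration, not part of any real phone system.

```python
# Hypothetical menu: the key-to-department mapping mirrors the example above.
MENU = {
    "1": "sales",
    "2": "customer service",
    "3": "technical support",
    "4": "operator",
}


def route_keypress(key: str) -> str:
    """Return the department for a key press.

    Assumption: any key that is not on the menu falls back to the operator.
    """
    return MENU.get(key, "operator")


print(route_keypress("3"))  # technical support
```

Every possible input and its outcome is written down in advance, which is what makes the problem a good fit for an expert system.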

Newer, more advanced phone systems use natural language processing. When someone calls in, the message tells the caller to say what they’re calling about. A caller may say something like, “I’m having a problem with my Android smartphone,” and the system routes the call to technical support. If, instead, the caller says something like, “I want to upgrade my smartphone,” the system routes the call to sales.

The challenge with natural language processing is that what callers say and how they say it is uncertain. An angry caller may say something like “That smartphone I bought from you guys three days ago is a piece of junk.” You can see that this is a more complex problem. The automated phone system would need accurate speech recognition and would then have to infer the meaning of that statement so that it could direct the caller to the right department.

With an expert system, you would have to manually input all the possible statements and questions, and the system would still run into trouble when a caller mumbled or spoke with an accent or spoke in another language.

In this case, machine learning would be the better choice. With machine learning, the system would get smarter over time as it discovered patterns on its own. If someone called in and said something like, “I hate my new smartphone and want to return it,” and they were routed to sales and then transferred to customer service, the system would learn that the next time someone called and mentioned the word “return,” that call should be routed directly to customer service, not sales.
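To make the idea of learning from transfers concrete, here is a deliberately tiny sketch. It is not how a production system or a real natural language model works: it simply tallies which department each word ended up in and routes new calls by majority vote. The class name `LearningRouter`, the `tokenize` helper, and the default department are all hypothetical.

```python
import re
from collections import Counter, defaultdict


def tokenize(statement: str) -> set:
    """Lowercase the statement and split it into a set of words."""
    return set(re.findall(r"[a-z']+", statement.lower()))


class LearningRouter:
    """Toy router that learns word-to-department associations from outcomes."""

    def __init__(self, default: str = "sales"):
        self.default = default              # used until something is learned
        self.votes = defaultdict(Counter)   # word -> Counter of departments

    def record(self, statement: str, final_department: str) -> None:
        """Remember where a call with this statement finally ended up."""
        for word in tokenize(statement):
            self.votes[word][final_department] += 1

    def route(self, statement: str) -> str:
        """Pick the department with the most votes across the caller's words."""
        tally = Counter()
        for word in tokenize(statement):
            tally.update(self.votes.get(word, Counter()))
        return tally.most_common(1)[0][0] if tally else self.default


router = LearningRouter()
print(router.route("Can I return a tablet?"))  # sales (nothing learned yet)
router.record("I hate my new smartphone and want to return it", "customer service")
print(router.route("Can I return a tablet?"))  # customer service
```

Nobody wrote a rule for the word "return"; the association came from the recorded outcome of an earlier call.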

When you start an AI program, consider which approach is best for your specific use case. If you can draw a decision tree or flow chart to describe a specific task the computer must perform based on limited inputs, then an expert system is probably the best choice. It may be easier to set up and deploy, saving you time, money and the headaches of dealing with more complex systems. If, however, you’re dealing with massive amounts of data and a system that must adapt to changing inputs, then machine learning is probably the best choice.

Some AI experts mix these two approaches. They use an expert system to define some constraints and then use machine learning to experiment with different answers. So you have three choices — an expert system, machine learning or a combination of the two.

In one of my previous posts, "Solve General Problems with Artificial Intelligence," I discussed an approach to artificial intelligence (AI) referred to as the physical symbol system hypothesis (PSSH). The theory behind this approach is that human intelligence consists of the ability to take symbols (recognizable patterns), combine them into structures (expressions), and manipulate them using various processes to produce new expressions.

As philosopher John Searle pointed out with his Chinese room argument, this ability, in and of itself, does not constitute intelligence, because it requires no understanding of the symbols or expressions. For example, if you ask your virtual assistant (Siri, Alexa, Bixby, Cortana, etc.) a question, it searches through a list of possible responses, chooses one, and provides that as the answer. It doesn't understand the question and has no desire to answer the question or to provide the correct answer.

AI built on the PSSH is proficient at storing lots of data (memorization) and pattern-matching, but it is not so good at learning. Learning requires the ability not only to memorize but also to generalize and specify.

Human evolution has made us experts at memorization, generalization, and specification. To a large degree, it is how we learn. We record (memorize) details about our environment, experiences, thoughts, and feelings; form generalizations that enable us to respond appropriately to similar environments and experiences in the future; and then fine-tune our impressions through specification. For example, if you try to pet a dog, and it snaps at you, you may generalize to avoid dogs in the future. However, over time, you develop more nuanced thoughts about dogs—that not all dogs snap when you try to pet them and that there are certain ways to approach dogs that make them less likely to snap at you.

The combination of memorization, generalization, and specification is a valuable survival skill. It also plays a key role in enabling machine learning — providing machines with the ability to recognize unfamiliar patterns based on what they already "know" about familiar patterns.

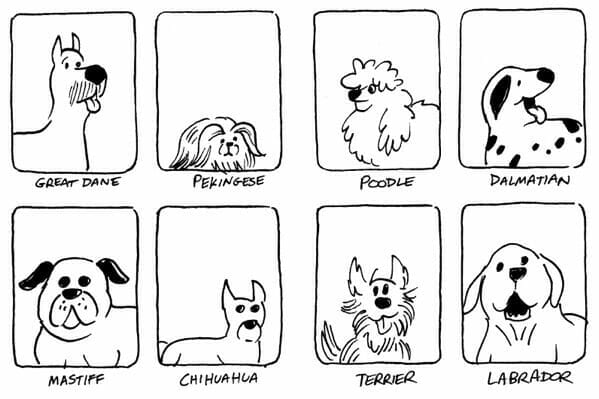

Look at the following eight images. You can tell that the eight images represent different breeds of dogs, even though these aren't photographs of actual dogs.

You know that these are all pictures of dogs, because you have encountered many dogs in your life (in person, in photos and drawings, and in videos), and you have formed in your mind an abstract idea of what a dog looks like.

In their early stages of learning, children often overgeneralize. For example, upon learning the word "dog," they call all furry creatures with four legs "dog." To these children, cats are dogs, cows are dogs, sheep are dogs, and so on. This is where specification comes into play. As children encounter different species of four-legged mammals, they begin to identify the qualities that make them distinct.

Computers are far better at memorization and far worse at generalization and specification. A computer could easily memorize the eight images of dogs, but if you fed it a ninth image of a dog, it would likely struggle to match it to one of the existing images and identify it as an image of a dog. In other words, the computer would quickly master memorization and pattern-matching but struggle to learn due to its inability to generalize and specify.

For this same reason, language translation programs have always struggled with accuracy. Developers have created physical symbol systems that translate words and phrases from one language to another, but these programs never really learn the language. Instead, they function merely as an old-fashioned foreign language dictionary and phrasebook — quickly looking up words and phrases in the source language to find the matching word or phrase in the destination language and then stitching together the words and phrases to provide the translation. These translation programs often fail when they encounter unfamiliar words, phrases, and even syntax (word order).
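The dictionary-and-phrasebook behavior described above can be sketched in a few lines. The three-entry `PHRASEBOOK` and the `translate` function are invented for illustration; real translation programs were far larger, but the failure mode is the same: anything not memorized cannot be translated.

```python
# Hypothetical English-to-Spanish phrasebook with only three entries.
PHRASEBOOK = {
    "good morning": "buenos días",
    "thank you": "gracias",
    "dog": "perro",
}


def translate(text: str) -> str:
    """Translate by looking up the longest known phrase at each position.

    Unknown words are passed through in brackets, since a pure lookup
    system has no way to work out what they mean.
    """
    words = text.lower().split()
    output = []
    i = 0
    while i < len(words):
        # Try the longest candidate phrase first, then shorter ones.
        for size in range(len(words) - i, 0, -1):
            chunk = " ".join(words[i:i + size])
            if chunk in PHRASEBOOK:
                output.append(PHRASEBOOK[chunk])
                i += size
                break
        else:
            output.append(f"[{words[i]}?]")
            i += 1
    return " ".join(output)


print(translate("Good morning dog"))  # buenos días perro
print(translate("Thank you puppy"))   # gracias [puppy?]
```

The program knows "dog" but has no way to connect it to "puppy," because it memorizes entries without generalizing from them.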

Currently, machines have the ability to learn. The big challenge in the future of AI learning and machine learning will be to enable machines to do a better job of generalizing and specifying. Instead of merely matching memorized symbols, newer machines will create abstract models based on the patterns they observe (the patterns they are fed). These models will have the potential to help these machines learn more effectively, so they can more accurately interpret unfamiliar future input.

As machines become better at generalizing and specifying, they will achieve greater levels of intelligence. It remains to be seen, however, whether machines will ever have the capacity to develop self-awareness and self-determination, which are key characteristics of human intelligence.

AI planning is a branch of artificial intelligence whose purpose is to identify strategies and action sequences that will, with a reasonable degree of confidence, enable an AI program to deliver the correct answer, solution, or outcome.

As I explained in a previous article, "Solve General Problems with Artificial Intelligence," one of the limitations of early AI, which was based on the physical symbol system hypothesis (PSSH), is combinatorial explosion: a mathematical phenomenon in which the number of possible combinations grows beyond the computer's capability to explore all of them in a reasonable amount of time.
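A quick way to get a feel for combinatorial explosion is to count the ways a handful of items can be ordered, which grows as a factorial. The sketch below is only an illustration: the rate of one billion checks per second is an assumed figure, and the function names are made up.

```python
import math

CHECKS_PER_SECOND = 1_000_000_000      # assumed speed, for illustration only
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def orderings(n: int) -> int:
    """Number of distinct ways to arrange n items (n factorial)."""
    return math.factorial(n)


def years_to_check_all(n: int) -> float:
    """Years needed to examine every ordering at the assumed speed."""
    return orderings(n) / CHECKS_PER_SECOND / SECONDS_PER_YEAR


for n in (5, 10, 15, 20):
    print(f"{n:>2} items: {orderings(n):,} orderings, "
          f"{years_to_check_all(n):.2e} years to check them all")
```

Five items give 120 orderings, but 20 items give more than two quintillion, which under these assumptions would take decades to enumerate.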

AI planning attempts to solve the problem of combinatorial explosion by using something called heuristic reasoning — an approach that attempts to give artificial intelligence a form of common sense. Heuristic reasoning enables an AI program to rule out a large number of possible combinations by identifying them as impossible or highly unlikely. This approach is sometimes referred to as "limiting the search space."

A heuristic is a mental shortcut or rule-of-thumb that enables people to solve problems and make decisions quickly. For example, the Rule of 72 is a heuristic for estimating the number of years it would take an investment to double your money. You divide 72 by the rate of return, so an investment with a 6% rate of return would double your money in about 72/6 = 12 years.
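The following sketch compares the Rule of 72 with the exact doubling time, assuming the return compounds once per year (the compounding assumption and the function names are mine, not part of the rule itself).

```python
import math


def rule_of_72(rate_percent: float) -> float:
    """Heuristic estimate of the years needed to double an investment."""
    return 72 / rate_percent


def exact_doubling_years(rate_percent: float) -> float:
    """Exact doubling time, assuming annual compounding."""
    return math.log(2) / math.log(1 + rate_percent / 100)


for rate in (3, 6, 9, 12):
    print(f"{rate}%: rule of 72 = {rule_of_72(rate):.1f} years, "
          f"exact = {exact_doubling_years(rate):.1f} years")
```

At 6%, the shortcut gives 12 years and the exact calculation gives about 11.9, which is close enough for a quick decision and shows why a heuristic is often worth the small loss in precision.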

Heuristic reasoning is common in innovation. Inventors rarely consider all the possibilities for solving a particular problem. Instead, they start with an idea, a hypothesis, or a hunch based on their knowledge and prior experience, then they start experimenting and exploring from that point forward. If they were to consider all the possibilities, they would waste considerable time, effort, energy, and expertise on futile experiments and research.

With AI planning, you might combine heuristic reasoning with a physical symbol system to improve performance. For example, imagine heuristic reasoning applied to the Chinese room experiment I introduced in my previous post on the general problem solver.

In the Chinese room scenario, you, an English-only speaker, are locked in a room with a narrow slot on the door through which notes can pass. You have a book filled with long lists of statements in Chinese, and the floor is covered in Chinese characters. You are instructed that upon receiving a certain sequence of Chinese characters, you are to look up a corresponding response in the book and, using the characters strewn about the floor, formulate your response.

What you do in the Chinese room is very similar to how AI programs work. They simply identify patterns, look up entries in a database that correspond to those patterns, and output the entries in response.

With the addition of heuristic reasoning, AI could limit the possibilities for the first note. For example, you could program the software to expect a message such as "Hello" or "How are you?" In effect, this would limit the search space, so that the AI program had to search only a limited number of records in its database to find an appropriate response. It wouldn't get bogged down searching the entire database to consider all possible messages and responses.

The only drawback is that if the first message were not one of those anticipated, the AI program would need to search its entire database.
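Here is one way to sketch that trade-off. English phrases stand in for the Chinese messages, the five-record `DATABASE` is invented, and each lookup counts its comparisons so the savings and the cost of the fallback are both visible.

```python
# Hypothetical database of (message, response) records, searched linearly.
DATABASE = [
    ("where is the station?", "Two blocks north."),
    ("what time is it?", "Noon."),
    ("hello", "Hello!"),
    ("how are you?", "Fine, thanks."),
    ("goodbye", "Goodbye!"),
]

# Heuristic: the first note is probably a greeting, so check these first.
EXPECTED_FIRST = {"hello", "how are you?"}
SHORTLIST = [record for record in DATABASE if record[0] in EXPECTED_FIRST]


def scan(records, message):
    """Linear search; returns (response or None, comparisons made)."""
    for count, (pattern, response) in enumerate(records, start=1):
        if pattern == message:
            return response, count
    return None, len(records)


def respond(message: str):
    """Search the shortlist first and fall back to the full database."""
    message = message.strip().lower()
    response, checked = scan(SHORTLIST, message)
    if response is None:
        response, more = scan(DATABASE, message)
        checked += more
    return response, checked


print(respond("Hello"))    # ('Hello!', 1)
print(respond("Goodbye"))  # ('Goodbye!', 7)
```

An anticipated greeting is found after one comparison, while an unanticipated message costs the two wasted shortlist checks plus the full search.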

Heuristic reasoning is commonly employed in modern AI applications. For example, if you enter your location and destination in a GPS app, the app doesn't search its entire database of source data, which consists of satellite and aerial imagery; state, city, and county maps; the US Geological Survey; traffic data; and so on. Instead, it limits the search space to the area that encompasses the location and destination you entered. In addition, it limits the output to the fastest or shortest route (not both) depending on which setting is in force, and it likely omits a great deal of detail from its maps to further expedite the process.
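As a rough sketch of limiting the search space by area, the function below keeps only the map points that fall inside a padded rectangle around the start and destination. Real navigation software is far more sophisticated; the node names, coordinates, and `margin` parameter here are all hypothetical.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def candidate_nodes(nodes: Dict[str, Point], start: Point, end: Point,
                    margin: float) -> List[str]:
    """Return the names of nodes inside the padded box around start and end."""
    min_x = min(start[0], end[0]) - margin
    max_x = max(start[0], end[0]) + margin
    min_y = min(start[1], end[1]) - margin
    max_y = max(start[1], end[1]) + margin
    return [
        name
        for name, (x, y) in nodes.items()
        if min_x <= x <= max_x and min_y <= y <= max_y
    ]


# Made-up map: three nearby intersections and two far-away ones.
NODES = {"A": (0, 0), "B": (1, 2), "C": (3, 1), "D": (50, 50), "E": (-20, 4)}

print(candidate_nodes(NODES, start=(0, 0), end=(3, 2), margin=1))
# ['A', 'B', 'C']
```

A route search would then run only over the surviving nodes. The cost of the shortcut is that a route that leaves the box, such as a long detour that happens to be faster, is never considered.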

The goal is to deliver an accurate map and directions, in a reasonable amount of time, that lead you from your current location to your desired destination as quickly as possible. Without the shortcuts to the process provided by heuristic reasoning, the resulting combinatorial explosion would leave you waiting for directions... possibly for the rest of your life.

Even though many of the modern AI applications are built on what are now considered old-fashioned methods, AI planning allows for the intelligent combination of these methods, along with newer methods, to build AI applications that deliver the desired output. The resulting applications can certainly make computers appear to be intelligent beings — providing real-time guidance from point A to point B, analyzing contracts, automating logistics, and even building better video games.

If you're considering a new AI project, don't be quick to dismiss the benefits of good old-fashioned AI (GOFAI). Newer approaches may not be the right fit.

To understand the concept of artificial intelligence, we must first start by defining intelligence. According to the dictionary definition, intelligence is a "capacity for learning, reasoning, understanding, and similar forms of mental activity; aptitude for grasping truths, relationships, facts, meanings, etc." This definition is broad enough to cover both human and computer (artificial) intelligence. Both people and computers can learn, reason, understand relationships, distinguish facts from falsehoods and so forth.

However, some definitions of intelligence raise the bar to include consciousness or self-awareness, wisdom, emotion, sympathy, intuition and creativity. In some definitions, intelligence also involves spirituality — a connection to a greater force or being. These definitions separate natural, human intelligence from artificial intelligence, at least in the current, real world. In science fiction, in futuristic worlds, artificially intelligent computers and robots often make the leap to self-consciousness and self-determination, which leads to conflict with their human creators. In The Terminator, artificial intelligence leads to all-out war between humans and the intelligent machines they created.

A further challenge to our ability to define “intelligence” is the fact that human intelligence comes in many forms and often includes the element of creativity. While computers can be proficient at math, repetitive tasks, playing certain games (such as chess), and anything else a human being can program them to do (or to learn to do), people excel in a variety of fields, including math, science, art, music, politics, business, medicine, law, linguistics and so on.

Another challenge to defining intelligence is that we have no definitive standard for measuring it. We do have intelligence quotient (IQ) tests, but a typical IQ test evaluates only short-term memory, analytical thinking, mathematical ability and spatial recognition. In high school, we take the ACT and SAT to gauge our mastery of what we should have learned in school, but the results from those tests don't always reflect a person's true intelligence. In addition, while some people excel in academics, others are skilled in trades or have a higher level of emotional competence or spirituality. There are also people who fail in school but still manage to excel in business, politics, or their chosen careers.

Without a reliable standard for measuring human intelligence, it’s very difficult to point to a computer and say that it's behaving intelligently. Computers are certainly very good at performing certain tasks and may do so much better and faster than humans, but does that make them intelligent? For example, computers have been able to beat humans in chess for decades. IBM Watson beat some of the best champions on the game show Jeopardy!, and Google DeepMind's AlphaGo has beaten some of the best players of Go, a 2,500-year-old Chinese game so complex that there are thought to be more possible configurations of the board than there are atoms in the observable universe. Yet none of these computers understands the purpose of a game or has a desire to play.

As impressive as these accomplishments are, they are still just a product of a computer’s special talent for pattern-matching. Pattern-matching is what happens when a computer extracts information from its database and uses that information to answer a question or perform a task. This seems to be intelligent behavior only because a computer is excellent at that particular task. However, excellence at performing a specific task is not necessarily a reflection of intelligence in a human sense. Just because a computer can beat a chess master does not mean that the computer is more intelligent. We generally don't measure a machine's capability in human terms—for example, we don't describe a boat as swimming faster than a human or a hydraulic jack as being stronger than a weightlifter—so it makes little sense to describe a computer as being smarter or more intelligent just because it is better at performing a specific task.

A computer's proficiency at pattern-matching can make it appear to be intelligent in a human sense. For example, computers often beat humans at games traditionally associated with intelligence. But games are the perfect environments for computers to mimic human intelligence through pattern-matching. Every game has specific rules with a certain number of possibilities that can be stored in a database. When IBM's Watson played Jeopardy!, all it needed to do was use natural language processing (NLP) to understand the question, buzz in faster than the other contestants, and apply pattern-matching to find the correct answer in its database.

Early AI developers knew that computers had the potential to excel in a world of fixed rules and possibilities. Only a few years after the first AI conference, developers had their first version of a chess program. The program could match an opponent’s move with thousands of possible counter moves and play out thousands of games to determine the potential ramifications of making a move before deciding which piece to move and where to move it, and it could do so in a matter of seconds.
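Chess is far too large to fit in a short example, so the sketch below applies the same look-ahead idea to a much smaller stand-in game: players alternate taking one to three stones from a pile, and whoever takes the last stone wins. This is not the early chess program itself, only an illustration of playing out every continuation before choosing a move; the function names are hypothetical.

```python
from functools import lru_cache

MOVES = (1, 2, 3)  # a player may take one, two, or three stones per turn


@lru_cache(maxsize=None)
def can_win(stones: int) -> bool:
    """True if the player about to move can force a win.

    With zero stones left, the previous player took the last stone,
    so the player to move has already lost.
    """
    return any(not can_win(stones - take) for take in MOVES if take <= stones)


def best_move(stones: int) -> int:
    """Choose a move that leaves the opponent in a losing position."""
    for take in MOVES:
        if take <= stones and not can_win(stones - take):
            return take
    return 1  # every move loses against perfect play, so take one stone


print(can_win(4))     # False
print(best_move(5))   # 1
print(best_move(10))  # 2
```

Because the rules are fixed and the number of positions is small, the program can examine every outcome. A full game of chess has too many positions for that, which is why chess programs limit how far ahead they look.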

Artificial intelligence is always more impressive when computers are on their home turf — when the rules are clear and the possibilities limited. Organizations that benefit most from AI are those that work within a well-defined space with set rules, so it’s no surprise that organizations like Google fully embrace AI. Google’s entire business involves pattern-matching — matching users’ questions with a massive database of answers. AI experts often refer to this as good old-fashioned artificial intelligence (GOFAI).

If you're thinking about incorporating AI in your business, consider what computers are really good at: pattern-matching. Do you have a lot of pattern-matching in your organization? Does a lot of your work have set rules and possibilities? This is the work that will benefit from AI first.