The success of any data science initiative hinges on the team's ability to ask interesting questions that are relevant to the organization's success and the team's ability and willingness to challenge assumptions and beliefs. After all, without questions, you can have no answers. However, asking compelling questions and challenging long-held beliefs that have become accepted as facts can be a significant challenge, especially in organizations with strict hierarchies that discourage questioning and the challenging of authority.

If your data science team is struggling to come up with compelling questions and hesitates to challenge assumptions, the suggestions I present in this post can get the ball rolling. Getting started is the most difficult part. As soon as the team gets into the swing of asking questions and questioning beliefs, it will have no shortage of follow-up questions and problems to investigate.

One of the best ways to encourage data science team members to ask questions and challenge beliefs is to build an environment that's conducive to the free exchange of ideas. The research lead is ultimately responsible for this environment and can start to nurture the free exchange of ideas by modeling the desired behavior: listening and learning without judging. Everyone on the team should engage in deep listening, that is, focused listening that enables them to hear and understand what others are saying while ignoring any initial impulse to judge what they hear. Team members need to recognize that they have plenty of time later to analyze what they hear, but the first step is to fully understand what the other person is getting at.

A good way to encourage questions and reinforce deep listening is to conduct question meetings. In these meetings, the research lead should encourage participants to ask questions before making statements. This technique is sometimes called a "question first" approach. These meetings are about eliciting the maximum number of questions. They’re focused on everyone asking their questions and listening. Ban smartphones, laptops, and other electronic devices from these meetings. Everyone should focus on listening, although you may want to assign one person in the meeting the task of taking notes.

Although question meetings are mostly unstructured, consider starting the meeting like this:

Avoid quick statements that are likely to limit the scope of the discussion, such as "The CEO suspects that we are losing market share due to the recent reorganization of our marketing department." Such statements keep people from coming up with their best ideas. Remember that it’s the discussion that gives your team the greatest value. You want the team to consider all possibilities.

After a question meeting, you should have plenty of questions — far more than you need and some far more valuable than others. Now it's time to pan for gold — to identify the few questions you want your team to explore.

When evaluating questions, it often helps to categorize questions as open- or close-ended and then identify individual questions as essential or non-essential:

If you’re the research lead, make sure that the team is not asking too many of any one type of question. Too many open-ended questions can result in the team spending too much time wondering and not enough time exploring the data. Too many close-ended questions can result in too much time digging up facts and too little time looking at the big picture.

You can also categorize questions as essential and non-essential:

If you’re a fan of detective shows, you’ve probably seen a crime wall. That’s when a detective tries to figure out all the different pieces of an unsolved mystery. He or she puts up pictures and notes on a wall and tries to connect the different pieces. The board becomes a visual story. That’s why you’ll often see the detective sitting on the floor staring at the board trying to pull together the story from all the little mysteries in the data.

Your data science team will have a similar challenge. They’ll try to tell a story but they’ll only have pieces of the puzzle. Your team can use the same technique to create a question board—a place where they can see all the questions and data. That way they can tell a larger story.

Creating a question board is a great way to display ideas and solicit questions from your team and the rest of the organization. At the very top of the board, you should put a simple identifier such as “question board” or “ask a question.” The question board is a clear way to communicate questions and organize them in one place.

Your data science team should have dozens or even hundreds of different questions. The question board will likely be a key meeting point for the team as well as a great place for team members and stakeholders to talk about the project.

To start, place your question board next to someone’s desk on the team or in a hallway. Open spaces aren’t good for a question board. You’ll want people to stand next to the board and read the questions. Another suggestion is to put the board next to an area with a lot of traffic. Ideal places are next to the water cooler, snack bar, or bathroom. It should be a place where several team members can meet and not distract other people.

Usually, the best way to organize your board is to use different color sticky notes. You’ll want to organize your board from top to bottom. The sticky notes at the top of the board contain your essential questions. Use red or pink sticky notes for these questions. Below them, you can use yellow sticky notes for nonessential questions. Remember that these are questions that address smaller issues. They are usually closed questions with a correct answer. Finally, you can use white or purple sticky notes for results. These are little data points that the team discovered that might help address the question.

There are five major benefits to having a question board:

Remember that you want your team to have deep discussions. Everyone should be able to question each other’s reasoning. The team should listen to each other’s questions and try to come up with questions of their own. They should be focused on learning and not judging the quality of their questions.

The question board helps with this because it provides a place for people to focus their discussions. It also helps the team stand up and participate physically and come up with new ideas.

Many of your questions will be interconnected. Often, you’ll have essential questions that are connected to several closed, nonessential questions. If it’s on the wall, you can use string to show these connections. If it’s on a whiteboard, you can just draw different colored lines. This will help your team stay organized and even prioritize their highest value questions.

The question board will invite other people outside your team to participate. You might want to leave a stack of green sticky notes next to the board. Leave a marker and a small note that invites other people to add their own questions. Sometimes these questions from outside the team tell the most interesting stories.

Your question board will be a key part of communicating your data science story. It should have the questions that your team is working to address. It may also have little bits of data that suggest some answers. A good question board encourages other people to participate and tempts people to be part of your shared story.

One of the challenges of a question board is keeping it filled with questions while keeping it well organized. Since it’s designed for a group discussion, you want everyone to be able to share the same information. It shouldn’t have several different groups of one person’s notes. If each group only has one person’s ideas, that one person will be the only one to understand its meaning.

Instead, all your questions should be organized using the same system. One of the best ways to do this is by creating question trees. A question tree is a group of sticky notes all related to one essential question. You’ll want to have the essential questions in the most attention-grabbing color. Usually this is either red or pink.

Let’s imagine a question board for our running shoe website. One question that your team came up with is, “Can our website help encourage non-runners to become runners?” If you’re the research lead for the team, you want to put this essential question on a red sticky at the very top of the board.

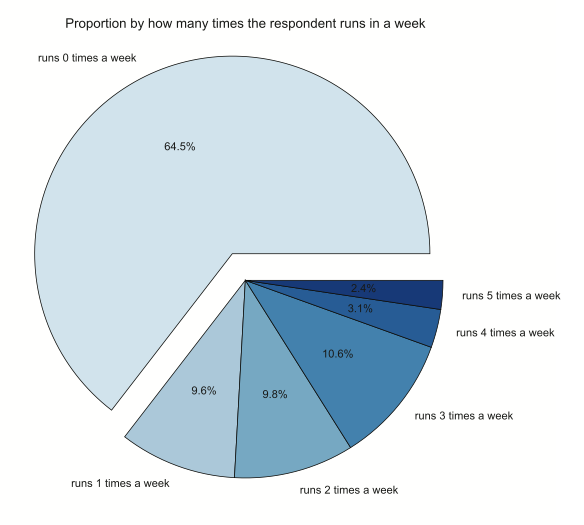

Underneath that essential question, you can start adding other questions. It could be another essential question such as, “What makes people run?” It could also be a nonessential question like, “Do non-runners shop on our site?” Since this is a closed question, you could put a little data sticky next to the yellow question sticky. Maybe something like, “Data suggest that 65% of our customers don’t run in a given week.” You could use a pie chart like the one shown below to illustrate this point.

Assume that this generated data comes from a survey that the company did on its customers. The question asked, “How many times, on average, do you run per week?” When you look at the data, you see that about 65% of the respondents don't run at all. The remaining 35% or so run at least once per week.
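As a rough illustration of how a data sticky like the 65% figure could be derived, here is a minimal Python sketch. The `responses` list is made-up sample data (13 of 20 respondents answering zero, chosen only to match the 65% figure), and the `share` helper is a hypothetical name, not something from the survey itself.

```python
from typing import Callable, List

# Hypothetical answers to "How many times, on average, do you run per week?"
# 13 of the 20 invented respondents answer 0, to match the 65% in the example.
responses: List[int] = [0] * 13 + [1] * 3 + [2] * 2 + [3, 5]


def share(answers: List[int], predicate: Callable[[int], bool]) -> float:
    """Return the percentage of answers that satisfy the predicate."""
    matching = sum(1 for answer in answers if predicate(answer))
    return 100 * matching / len(answers)


non_runners = share(responses, lambda runs: runs == 0)
runners = share(responses, lambda runs: runs >= 1)

print(f"Don't run at all: {non_runners:.0f}%")
print(f"Run at least once per week: {runners:.0f}%")
```

Because every respondent falls into exactly one of the two groups, the two shares always add up to 100%. That makes a quick sanity check before a number goes onto a sticky note.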

Someone looking at the question tree should be able to follow the thought process of the team. She should see that the lower branches of questions started with one open-ended essential question (“Can our website help encourage non-runners to become runners?”) and see the team addressing that question. She should be able to follow it all the way down to different branches.

Let’s say that the question, “What makes people run?”, branches off in its own direction. Underneath that question is another question that says, “Do they run to relieve stress?” Underneath that is another question that says, “Can non-runners who are stressed see the benefits of running?”

With the question tree, the research lead now has a report to show progress to the rest of the organization. She could show that the data science team is working on several high-value questions simultaneously. It shouldn’t be too difficult to see how gaining insight into creating customers might increase revenue.

The question trees help the research lead connect the team’s work to real business value. A question board should have several question trees. At the very top of the board, there should be several red or pink essential questions. Each of these should branch down like an upside-down tree into several other questions. Be sure to use different color sticky notes as discussed previously (essential questions red or pink and nonessential questions yellow). Sometimes open questions will branch off into different question trees, and you should end closed questions with little sticky notes that show the data.
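If your team keeps a digital copy of the board, a question tree maps naturally onto a small tree data structure. The following is a minimal Python sketch, not a prescribed tool: the `Question` class, the `render` function, and the `COLORS` mapping are hypothetical names, and the classification of the two stress-related questions as nonessential is an assumption, because the example above doesn't say.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Tuple

# Sticky-note colors, following the scheme described in the post.
COLORS = {"essential": "red", "nonessential": "yellow", "result": "white"}


@dataclass
class Question:
    text: str
    kind: str  # "essential" or "nonessential"
    results: List[str] = field(default_factory=list)  # data stickies
    children: List["Question"] = field(default_factory=list)

    def add(self, child: "Question") -> "Question":
        """Attach a follow-up question and return it for chaining."""
        self.children.append(child)
        return child

    def walk(self, depth: int = 0) -> Iterator[Tuple[int, "Question"]]:
        """Yield (depth, question) pairs from the top of the tree down."""
        yield depth, self
        for child in self.children:
            yield from child.walk(depth + 1)


def render(root: Question) -> str:
    """Return an indented text view of the tree, one sticky note per line."""
    lines = []
    for depth, question in root.walk():
        indent = "  " * depth
        lines.append(f"{indent}[{COLORS[question.kind]}] {question.text}")
        for result in question.results:
            lines.append(f"{indent}  [{COLORS['result']}] {result}")
    return "\n".join(lines)


root = Question(
    "Can our website help encourage non-runners to become runners?", "essential"
)
motive = root.add(Question("What makes people run?", "essential"))
# Assumption: the post does not classify the next two questions.
stress = motive.add(Question("Do they run to relieve stress?", "nonessential"))
stress.add(
    Question(
        "Can non-runners who are stressed see the benefits of running?",
        "nonessential",
    )
)
root.add(
    Question(
        "Do non-runners shop on our site?",
        "nonessential",
        results=["Data suggest that 65% of our customers don't run in a given week."],
    )
)

board_text = render(root)
print(board_text)
```

The indentation in the printed output plays the role of the branches on the physical board, so anyone reading it can trace a closed question back up to the essential question it supports.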

As with any tree, you’re going to want to prune your questions. This is one of the key responsibilities of the research lead. She needs to make sure that your questions lead to real business value. If she doesn’t think your questions will lead to insights, she might want to pull them off the question board so the data analyst doesn’t start searching for results.

Note: The research lead usually removes questions as part of the team’s question meetings. You don’t want your research lead pulling questions off the board without communicating the change to the team.
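For a team that tracks its board digitally, pruning can be sketched as removing branches while keeping a record of what was removed, so the change can be reported at the next question meeting. This is only an illustrative Python sketch. The `Note` class and `prune` function are hypothetical names, and the shoe-color question is an invented example of a low-value question.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Note:
    text: str
    children: List["Note"] = field(default_factory=list)


def prune(root: Note, keep: Callable[[Note], bool]) -> List[str]:
    """Drop every branch whose top question fails keep().

    Returns the text of the top question of each removed branch so the
    removals can be communicated to the team.
    """
    removed: List[str] = []
    kept_children: List[Note] = []
    for child in root.children:
        if keep(child):
            # Keep this question, but check its follow-ups as well.
            removed.extend(prune(child, keep))
            kept_children.append(child)
        else:
            removed.append(child.text)
    root.children = kept_children
    return removed


tree = Note(
    "What makes people run?",
    [
        Note("Do they run to relieve stress?"),
        Note("What color shoes do runners prefer?"),  # invented low-value question
    ],
)

# The research lead flags questions unlikely to lead to insights.
flagged = {"What color shoes do runners prefer?"}
removed_questions = prune(tree, lambda note: note.text not in flagged)
print("Removed:", removed_questions)
```

Returning the removed questions rather than deleting them silently reflects the note above: the team should always learn what came off the board.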

One of the key things about question trees is that they actually mirror how most teams come up with new questions. Remember that data science is using the scientific method to explore your data, which means that most of your data science will be empirical. Your team will ask a few questions, gather the data, and then react to that data and ask a series of new questions. When you use a question tree, it reflects what the team has learned. At the same time, it shows the rest of the organization your progress.

In my two previous posts, "The Data Science Life Cycle" and "Run Data Analytics Sprints," I present a six-stage framework to structure the work a data science team performs and five techniques for performing the work in intense, two-week cycles called data sprints. These techniques go a long way to making the data science team productive.

In this post, I call your attention to several pitfalls that commonly undermine the data science team's efforts, and I provide guidance on how to be proactive in avoiding these pitfalls. Generally, your data science team needs to squash anything that limits their mission to something other than exploration and discovery.

Many organizations create data science teams and then essentially tie their hands, preventing them from truly exploring the data. Much less frequently, organizations provide their data science teams with too much freedom, so the teams end up chasing data and questions that are irrelevant to the organization's success or getting so wrapped up in routine chores, such as managing the data warehouse, that they fail to produce anything of value. In most organizations, though, the problem involves a strict hierarchy that tries to control what the data science team does, and that is a formula for failure.

Prior to installing a data science team, an organization often must change its mindset and values. It must embrace a spirit of creativity and innovation, especially in respect to its data science team. When the team is doing what it should be doing, it is learning and helping the organization learn. It is discovering what the organization doesn’t know. Attempts to micro-manage the team run counter to its mission.

However, the data science team does need to deliver value. It should serve the needs of the organization. Data science teams can achieve that goal by being highly service-oriented and by collaborating with everyone across the organization to get their questions answered, help them overcome any challenges they face, and inform their decisions.

Most organizations still view work as a series of goals and objectives. They invest a great deal of time, money, and effort on planning, management, and compliance. Teams are expected to set goals in advance, formulate plans to meet those goals, execute their plans, and deliver the promised outcomes. While that approach works well for most teams, it is counterproductive for data science teams whose mission it is to explore and innovate. Data science teams need to follow the data and the questions, and they cannot shift direction if their path is carved in stone.

If you're on a data science team, you may feel as though your team is trying to hit a constantly moving target. Every sprint introduces new questions that may lead the team in a different direction. Sometimes, the team may not even know what the moving target is. The team may be looking for patterns in the data that reveal new targets. By working without objectives, the team has the flexibility it needs to let its curiosity and the data determine the outcomes.

Serendipity is a happy happenstance, such as striking up a conversation with the CEO of Microsoft at a Mariners game and having him offer you a job on the spot. It is an odd concept in the world of business, where strategy, goals, objectives, and planning are enshrined as the essential components of success.

However, more and more evidence points to the advantages of serendipity over goal setting and planning. One of the best books on the topic is Why Greatness Cannot Be Planned: The Myth of the Objective, by Ken Stanley and Joel Lehman. According to the authors, “Objectives actually become obstacles towards more exciting achievements, like those involving discovery, creativity, invention, or innovation.”

Data science teams are wise to capitalize on serendipity. For example, if a team member sees something unexpected and intriguing in the data the team is analyzing, the team needs to follow up on that discovery. You don't want your team focused on objectives at the expense of overlooking a groundbreaking discovery. Professor Stanley calls these “stepping-stones” — interesting things that eventually lead to insights. If you ignore them, you are likely to miss key discoveries.

When you're working on a data science team, it's easy to get so caught up in the data, analysis, exploration, and discovery that you lose sight of the organization's needs. Driven by innate curiosity to follow wherever the data leads, the team forgets that others in the organization are relying on it to deliver knowledge and insight that guide strategy and inform decision-making. Every couple of weeks, the team delivers its reports or presentations, which the team finds fascinating but which leave everyone else in the organization wondering "So what?" or "Who cares?"

To avoid this pitfall, the data science team must engage, to some degree, in guided exploration. Three tools in particular are helpful for structuring and guiding the data team's work:

By its very nature, routine is repetitive, and it can become hypnotic, lulling you into a complacency that prevents you from noticing the wonderful world that surrounds you. The same is true for a data science team. It can become so wrapped up in capturing, cleaning, and consolidating data and creating data visualizations that it loses its sense of adventure. It falls into a rut and stops asking interesting questions. When looking at the data, it may not even notice an intriguing fact that's staring right back at it.

To avoid this pitfall, try the following techniques:

Keep in mind that your data science team should be committed to exploration, discovery, and innovation that's relevant to the organization's needs. If the team works toward achieving that mission, it will be less susceptible to the most common pitfalls.

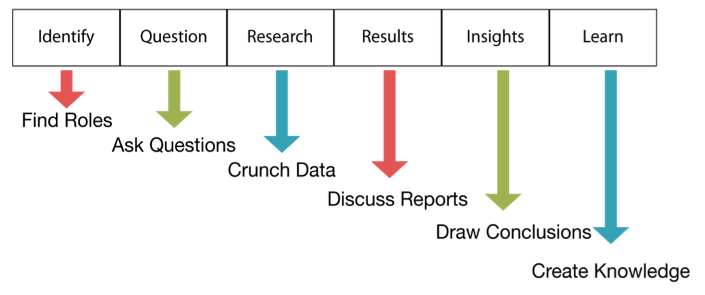

In my previous post, "The Data Science Life Cycle (DSLC)," I encourage you to adopt a structure for your data team's activities that is conducive to the type of work it does — exploration. I refer to this structure as the Data Science Life Cycle (DSLC), illustrated below.

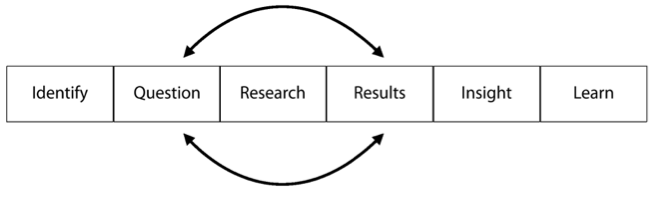

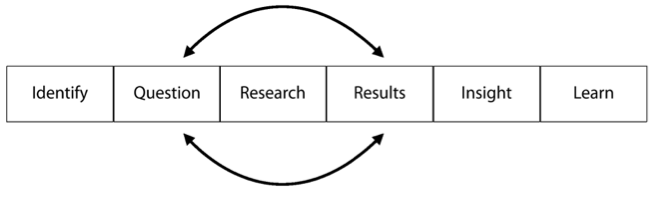

At first glance, DSLC appears to be a linear process, starting with identification and ending with learning, but the process is actually cyclical. Learning leads to more questions that return the team to the beginning of the process. In addition, mini-cycles often form within the DSLC as research and analysis results prompt questions that require additional research and analysis to answer, as shown below.

In this post, I drill down to illustrate how data science teams can function more effectively and efficiently within the DSLC framework by employing the following techniques:

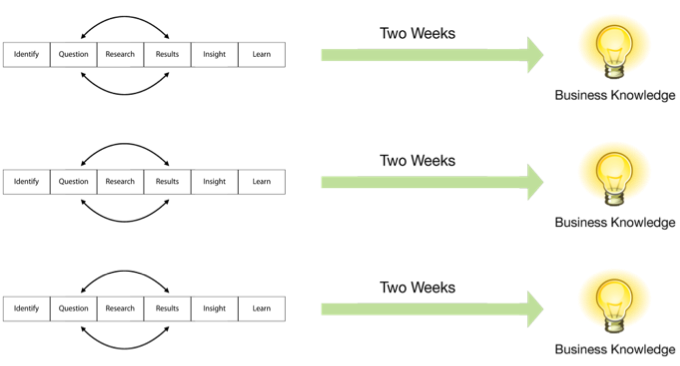

The DSLC isn’t designed to cycle over a long period of time. Two weeks is sufficient for a cycle (a sprint). That gives the team sufficient time to prepare and analyze the data and compose a story that reveals the knowledge and insight extracted from the data and its significance to the organization. With short cycles, if a specific line of enquiry proves fruitless, the team can change course and head in a different direction or tackle a new challenge.

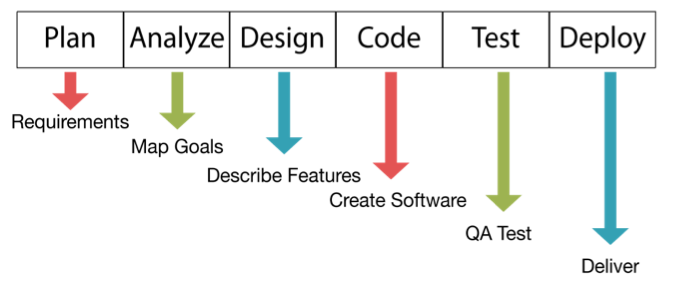

You may have heard of sprints in the context of agile software development methodologies, such as Scrum, but the term actually originated in product development. A sprint is a consistent, fixed period of time during which the team runs through an entire lifecycle. Each sprint should run through all six stages of the DSLC, as shown below.

As I explained in an earlier post, "Data Science Team Roles," teams should be small (four to five individuals) and include a research lead, data analyst, and project manager. Although every member of the team should be asking compelling questions, the research lead is primarily responsible for that task.

One of the most effective ways to inspire and share interesting questions is via a question board, usually a large whiteboard positioned near the data science team on which team members and others in the organization post questions or challenges. The board should have plenty of open space with a short stack of sticky notes in one of the corners. You may want to include a large arrow pointing down to the stack of sticky notes with the caption, “Ask a question.”

The question board should be open to everyone in the organization, including the research lead, other data science team members, executives, managers, and employees. Try to make your question board look as enticing as possible. Anyone in the organization should be able to walk by, grab a sticky note, and post a quick question.

Given only two weeks to complete each sprint, your data science team should limit the amount of time it spends in meetings and keep those meetings focused on a specific purpose. I recommend that teams conduct five meetings over the course of a two-week sprint, each with a specific purpose and a time limit that the team agrees upon in advance:

Breaking down your work involves allocating sufficient time to all six stages of the DSLC. What often happens is that data science teams get caught up in the research stage, specifically in the process of capturing, cleaning, and consolidating the data in preparation for analysis. Given only two weeks per sprint to deliver a story, the data science team has little time to prep the data. Like agile software development teams, the data science team should look to create a minimum viable product (MVP) during its sprint. With respect to data science, this would be a minimum viable data set: just enough data to get the job done.

Remember, at the end of a sprint, stakeholders in the organization will want to know "What do we know now that we didn't know before?" If your team gets caught up in data prep, it won't be able to answer that question.

Organizations that make significant investments in any initiative want to see a return on investment (ROI), typically in the form of a deliverable. In the world of data science, the deliverable is typically in the form of an interesting story that reveals both the meaning and the significance of the team's discoveries. Unlike a presentation or data visualization, which merely conveys what the team sees, a story conveys what the team believes. A good story provides context for understanding the data, along with guidance on how that understanding can benefit the organization.

An effective story accomplishes the following goals:

A project lifecycle can be a useful tool for structuring the process that a team follows. (A lifecycle is a repeating series of steps taken to develop a product, solve a problem, or engage in continuous improvement.) It functions as a high-level map to keep teams moving in the right direction. Although data science teams are less goal-oriented than more traditional teams, they too can benefit from the direction provided by a project lifecycle. However, traditional project lifecycles are not conducive to the work of data science teams.

In this post, I discuss two more traditional project lifecycles and explain why they are a poor fit for data science "projects." I then present a data science life cycle that is more conducive to the exploratory nature of data science.

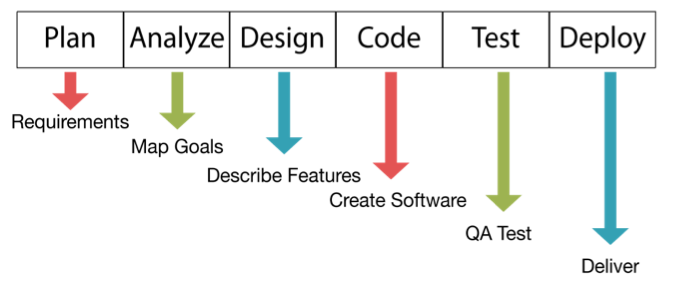

The software development lifecycle (SDLC) has six phases as shown below. Under each phase is an example of an activity that occurs during that phase. This is typically called the waterfall model because each one of these phases has to be complete before the next can begin.

SDLC works well for software development because these projects have a clearly defined scope (requirements), a relatively linear process, and a tangible deliverable — the software. However, this same lifecycle is poorly suited for data science, which has a very broad scope, a creative and often chaotic process, and a relatively intangible deliverable — knowledge and insight.

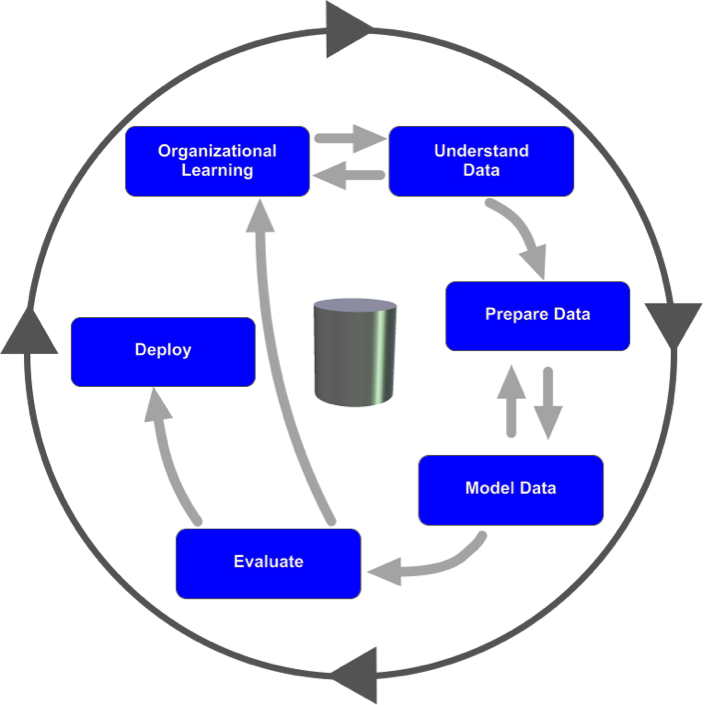

The Cross Industry Standard Process for Data Mining (CRISP-DM) lifecycle, which is used for data instead of software, is considerably more flexible than the waterfall model. It also has six phases, as shown below. The various phases aren't necessarily sequential, and the process continues after deployment, because learning sparks more questions that require further analysis.

CRISP-DM works much better for data science than does SDLC, but, like SDLC, it is still designed for big-bang delivery (deployment). With either model, the data science team is expected to spend considerable time in the early stages: planning and analyzing (for software development) or business understanding (for data mining). The goal is to gather as much information as possible at the start. The team is then expected to deliver the goods at the end.

For a data science team to be flexible and exploratory, it can't be forced to adopt a standard lifecycle. A more lightweight approach is needed to provide structure while allowing the team to be flexible and shift direction when appropriate.

The fact that traditional project lifecycles are not a good match for data science doesn't mean that data science teams should have complete operational freedom. These life cycles are valuable for structuring the team's activities. With a general sense of the path forward, the team at least has a starting point and some procedures to follow. A good lifecycle is like a handrail; it's there to provide support, but it's not something you need to cling to.

The approach that seems to work best for data science teams is the data science life cycle (DSLC), as shown below. This process framework, based loosely on the scientific method, is lightweight and less rigid than SDLC and CRISP-DM.

Like the two project life cycles presented earlier in this post, DSLC consists of six stages:

DSLC isn't always or even usually a linear, step-by-step process. The data science team should cycle through the questions, research, and results, as shown below, whenever necessary to gain clarity.

Some organizations that have strong data science teams already follow this approach. For example, the video subscription service Netflix used this approach to create their hit series “House of Cards.” They had 33 million subscribers at the time. Their data science team looked at what customers were watching, ratings of shows, what plots viewers liked, and the popular actors (Kevin Spacey was very popular at the time). Netflix determined that political shows were very popular and hired Spacey. Then they modeled the new show on the popular British version of the program.

The Netflix team used data science to develop the idea for the show. They created a predictive model based on analysis of viewer demand. They worked to cycle through questions, research, and results. They then created a story of what their customers would like to see. That story became an actual story that turned into a hit television program.

This cycle of question, research, and results drives insights and knowledge. The data science team loops through these areas as part of the larger DSLC. Remember not to think of this lifecycle as a waterfall process. Instead, think of it as a few steps to start and then a cycle in the middle to churn out great stories at the end.

In a previous post, "Data Science vs Software Engineering Projects," I point out some of the key differences between traditional projects and data science projects. One of these differences is in the deliverables. With traditional projects, the deliverables are often tangible — a new product, a specified increase in revenue or reduction in costs, adoption of a new information system, and so on. With traditional projects, organizations can set objectives and milestones and measure progress. Determining whether a team succeeded or failed in achieving its mission is relatively easy; either they delivered the goods, or they didn't.

With data science projects, the deliverables are less tangible: less quantitative and more qualitative. Data science teams engage in discovery with the goal of growing an ever-expanding body of valuable organizational knowledge and insight. How do you measure that? Their purpose is to provide knowledge and insight that enables executives to formulate more effective strategies and tactics and enables everyone in the organization to make better, data-driven decisions. How do you set a timeline for that?

Although setting objectives and measuring output are not conducive to data science, organizations still need a way to measure data science success. After all, the executive team needs proof that the data science team is earning its keep — that the return on investment is worth it.

At one point in his illustrious career as inventor, Thomas Edison noticed that one of his assistants was becoming discouraged by their failed experiments. Edison, on the other hand, remained upbeat. He assured his assistant that they hadn't failed. With each failed experiment, they were learning something new. To Edison, even a failed experiment was a success.

Retrospectively, we measure Edison's success by his many useful inventions, most notably the incandescent light bulb, the phonograph, and the motion picture camera. What we don't remember are his many failures, including the electric pen, the magnetic iron ore separator, and the concrete piano. When confronted about his many failures, Edison replied, "I have not failed. I've just found 10,000 ways that won't work."

Success in data science should be measured the same way Edison measured his success — by the learning his team captured and by the innovations it delivered. Data science teams often encounter dead ends. Experiments may not support the team's hypothesis. Insights gained may be of little to no value to the organization. However, as long as the team learns from its failures, those are a measure of success, as well.

Many organizations struggle with this approach. More goal-oriented teams may look over at the data science team and wonder, “What is that team doing?” Or even worse, “What does that team do again?” And if the data science team was formed as an experiment to see what it could come up with, and it fails to deliver anything of value in whatever window of time it was granted, the team may be disbanded before it even has a chance to produce.

Here are a few suggestions for improving your data science team's chances of acceptance and success:

I once worked for a university that hired a group of “unstructured data specialists.” The Provost wanted a data science team that looked for new insights. The team operated out of an office near the administrators who hired them. Few others in the university knew what the team was doing or were even aware of its existence. Due to the lack of clarity and understanding of the team's purpose, nobody in the university would make the time to meet with the research lead. Without input from the people who were most responsible for executing the university's mission, the team struggled to ask compelling questions and, hence, was unable to deliver any valuable insights.

The team's chances of success would have been greatly improved had it been placed in a location closer to the faculty instead of the administrators and had steps been taken to inform the faculty of the team's purpose and its potential for bringing value to the university. Making the team more transparent and accessible would have encouraged others to bring their questions and challenges to the team. Regular storytelling meetings would have enabled the team to share its knowledge and insights and celebrate its wins, thus publicizing the team's value.

If you’re the project manager on a data science team, work hard to make sure that the team is sitting with everyone else. Some of your best inspirations might come from people dropping in and asking questions. The better connected the team is with the rest of the organization, the easier it will be to come up with compelling questions that lead to flashes of innovation.

In a previous post, "Data Science Projects," I point out some of the key differences that separate data science from traditional project management. While traditional project management is focused more on goals, planning, and tangible deliverables, data science is a more open-ended operation with the focus on discovery and innovation — less tangible, but no less valuable, deliverables.

To arrive at a deeper understanding of the differences between traditional project management and data science, consider the unique challenges of a data science project:

Let’s look at a traditional project and compare it to what a data science team does. Then, we'll look at what often happens when traditional project management is applied to a data science team.

Consider a typical software project. Your organization wants to develop a human resources (HR) self-help portal for its employees. The project charter is to create the portal as a way to lower costs and improve overall employee satisfaction. The project will have a set cost, but the organization will save money by reducing HR costs and employee turnover. The estimated return on investment (ROI) for this project is substantial. The plan lays out all the features in a requirements document and includes a development schedule and detailed budget. The project manager will oversee development and update the plan to account for any changes in schedule, budget, or product requirements.

In contrast, consider how a data science team operates. The team is small: four to five people, including a research lead, a couple of data analysts, and a project manager. Their "mission" is to help the organization come to a better understanding of the customers’ needs and behaviors in the hopes that this deeper understanding reveals opportunities to generate more revenue.

The research lead starts by asking questions such as these:

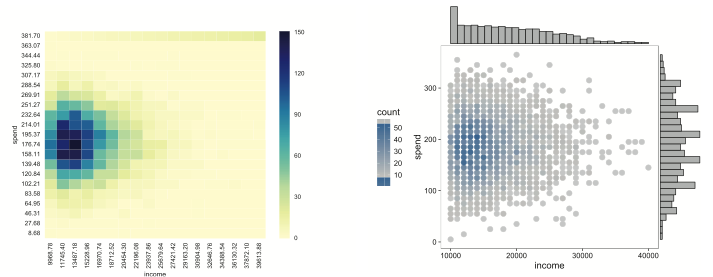

The data analysts do their job — analyze the data — to come up with answers to these questions. They deliver the answers in the form of data visualizations — graphic summaries of the data. For example, the data visualizations may be graphs that shed light on customer income and spend, as shown here. The x-axis (horizontal) represents income, and the y-axis (vertical) represents spending. Note that customers with higher incomes don’t necessarily spend more. Those who have an income around $20k–$30k seem to spend the most.
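The summary behind a chart like this can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the customer records, the `average_spend_by_bracket` helper, and the $10k bracket size are all hypothetical and invented here, not data or code from a real team.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical (income, annual spend) pairs, invented for illustration only.
customers = [
    (15_000, 900), (22_000, 2_400), (27_000, 2_600),
    (38_000, 1_500), (55_000, 1_200), (90_000, 1_400),
]


def average_spend_by_bracket(records, bracket_size=10_000):
    """Group customers into income brackets and average their spend."""
    buckets = defaultdict(list)
    for income, spend in records:
        # Floor each income to the lower edge of its bracket (27,000 -> 20,000).
        lower_edge = (income // bracket_size) * bracket_size
        buckets[lower_edge].append(spend)
    return {edge: mean(spends) for edge, spends in sorted(buckets.items())}


summary = average_spend_by_bracket(customers)
# The bracket with the highest average spend is the one worth asking "why?" about.
top_bracket = max(summary, key=summary.get)
```

With this made-up sample, the highest average spend lands in the $20k to $30k bracket, which is the kind of pattern that prompts the team's next round of questions.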

The analysts could also look at data from social media platforms and create a word cloud of feedback from thousands of customers, as shown below. For example, some of the largest words in the word cloud are “travel,” “recipe,” and “restaurant.”
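A word cloud is essentially a picture of word counts, so the step underneath it can be sketched with Python's standard library. The comments, the stop-word list, and the `word_frequencies` helper below are assumptions made up for this example; a real analysis would pull feedback from the platforms themselves and use a fuller stop-word list.

```python
import re
from collections import Counter

# Hypothetical customer comments, invented for illustration only.
comments = [
    "Loved the travel tips and the restaurant guide",
    "Great recipe ideas for travel days",
    "Any restaurant suggestions near the airport? I travel a lot",
]

# A tiny, assumed stop-word list; a real analysis would use a fuller one.
STOP_WORDS = {"the", "and", "for", "a", "i", "any", "near"}


def word_frequencies(texts):
    """Count how often each non-stop word appears across all texts."""
    counts = Counter()
    for text in texts:
        words = re.findall(r"[a-z']+", text.lower())
        counts.update(w for w in words if w not in STOP_WORDS)
    return counts


frequencies = word_frequencies(comments)
# The most frequent words are the ones a word cloud would draw largest.
top_words = frequencies.most_common(3)
```

The words with the highest counts are the ones that would appear largest in the cloud.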

Based on the knowledge and insight gleaned from these data visualizations, the team is likely to ask more questions, such as "Why do customers in a certain income bracket spend more than customers in higher or lower income brackets?" and "Why do our customers like to travel?" and "When our customers travel, where are they most likely to go?"

As you can imagine, knowing more about customers can lead to higher sales. The team could then share its discoveries with others in the organization. Marketing may decide to advertise more in travel magazines. Product development may shift its focus to products that are more closely related to travel. Sales might focus more of its efforts toward customers in a specific income bracket.

Then again, the team may hit a dead end. A data visualization created to analyze spending patterns among customers who travel and those who don't is inconclusive, as shown below. It reveals only that customers who travel outspend, by a relatively small margin, those who don't travel. It also shows that customers who do travel visit a variety of destinations around the world, and that the total spend by customers who travel to those destinations is no greater than the total spend by customers who don't travel. The data visualization doesn't provide sufficient evidence to support a change in what the company is doing, so the team abandons this line of inquiry and shifts direction.

Imagine trying to shoehorn data science into a traditional project management framework. How would you define the scope of the project when your exploration can lead you in so many different directions? How can you meet predetermined milestones when you're building an ever-increasing body of knowledge and insight about your customers? How can you possibly meet a deadline when you don't know, specifically, what you're looking for? How do you budget for time when you have no idea how long it will take to find the answers?

Data science is all about learning, and "learning" is a verb. Specifically, it is a verb in the form of a present participle, which conveys continuous action. Data science is engaged in ongoing discovery and innovation. It doesn't conform to the traditional project management framework. Don't try to force it to.

In my previous post, "Data Science Projects," I pointed out the differences between project management and data science. These differences are summarized in the following table:

| Project Management | Data Science |
| --- | --- |
| Planning | Exploring and experimenting |
| Goals and objectives | Discovery and knowledge |
| Schedule- and budget-driven | Data-driven |
| Certainty | Curiosity |
| Execution | Innovation |

You can see how these differences play out when comparing traditional software projects to typical data science projects, as presented in the following table. While traditional software projects are focused more on achieving a goal and delivering an end product, data science projects are more exploratory and open-ended. Both have deliverables, but with software projects, the deliverables are more tangible and deadline-oriented, whereas data science tends to deliver a less tangible and ever-growing body of knowledge and insights, which may be of even greater value to the organization.

| Traditional Software Project | Typical Data Science Project |
| --- | --- |
| Develop a new customer self-help portal | Better understand a customer’s needs |
| Create new software based on customer feedback | Create a data model to predict churn |
| Install a new server farm to increase scalability | Discover new markets and opportunities |
| Convert legacy code into updated software | Verify assumptions about customer behaviors |

Despite their differences, software project management is fast becoming more like data science with the growing popularity of agile software development methodologies, such as Scrum, Extreme Programming (XP), Lean and Kanban, and Dynamic Systems Development Method (DSDM).

Like data science, many of these newer software development methodologies follow the scientific method, at least to some degree. That is, they often begin with research to assess the customer's (end user's) needs, and they build the software gradually in multiple, iterative cycles (commonly referred to as "sprints"). Team members are encouraged to experiment during these cycles to innovate and build knowledge that the team can draw on to achieve continuous improvement, both in the product being developed and the process used to create that product.

In many cases, the software development cycle is never-ending — the software is in continuous development, improving continuously with each development cycle and with each new release. As with data science, the focus is more on the process than the product and is open-ended — a never-ending cycle of building knowledge and insight and driving innovation. In the case of software development, this knowledge and insight is applied to continuously improve the software. With data science, the knowledge and insight is applied to continuously improve the organization.

Spotify, the digital music, podcast, and video streaming service, follows this same iterative approach in the development of its platform. The company nurtures a creative, failure-friendly culture, as reflected in its values:

Spotify's approach to software development is rooted in the Lean Startup approach of "Think it, build it, ship it, tweak it." The organization even hosts "hack days" and "hack weeks," encouraging its development teams (called "squads") to spend ten percent of their time building whatever they want with whomever they want.

Squads are given a great deal of creative license to develop and test new features with the condition that they try to "limit the blast radius." They accomplish this by decoupling the architecture to enable each squad or "tribe" (a collection of squads) to work on an isolated part of the platform, so any mistakes are limited to that part; and by rolling out new features gradually to more and more users.
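One common way to roll a feature out gradually is to assign each user to a stable bucket and enable the feature only for buckets below the current rollout percentage. The sketch below illustrates that general technique only; it is not a description of Spotify's actual implementation, and the function names and the `new-playlist-view` feature name are hypothetical.

```python
import hashlib


def rollout_bucket(user_id: str, feature: str) -> int:
    """Map a user to a stable bucket from 0 to 99 for a given feature."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(user_id: str, feature: str, percent: int) -> bool:
    """Return True if the user falls inside the current rollout percentage."""
    return rollout_bucket(user_id, feature) < percent


# Hypothetical user IDs and feature name, for illustration only.
users = [f"user-{n}" for n in range(1000)]
enabled_at_10 = {u for u in users if is_enabled(u, "new-playlist-view", 10)}
enabled_at_50 = {u for u in users if is_enabled(u, "new-playlist-view", 50)}
```

Because each user's bucket never changes, raising the percentage only adds users. Everyone who had the feature at 10 percent still has it at 50 percent, which keeps the "blast radius" of a mistake small in the early stages.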

Spotify also places an emphasis on "capturing the learning." Teams experiment with new tools, features, and methods and then discuss the results to figure out ways to improve both product and process. They document what they learn and share it with other teams, so everyone in the organization is better equipped to make data-driven decisions instead of decisions driven by authority, ego, or opinion.

Organizations would be wise to follow Spotify's lead not only in developing new software but also in managing their data science teams or, even better, in allowing and enabling the data science teams to manage themselves. Your organization's data science team should feel free to ask questions, challenge assumptions, formulate and test its own hypotheses, and cross-pollinate (reach out to others in the organization for insight and feedback). The team's mission should be more about exploration, innovation, and discovery than about setting goals, meeting milestones, and staying on budget or on schedule.

The heartbeat of most organizations can be measured in projects. Various teams across the organization set goals and objectives, develop plans for meeting those goals and objectives, and then implement those plans in the hopes of executing their missions on schedule and on budget. Project management has been the shiny hammer that has helped to nail down costs and meet deadlines throughout the process. It has been so successful that organizations often rely on project management even when it’s poorly suited for a given activity, as is the case with creative endeavors.

Data science is one area in which project management is a poor match. Data science projects often operate without clearly defined goals or objectives. Their primary purpose is to explore — to mine data for organizational knowledge and insights. Of course, sometimes, they have a clear objective — a specific question to answer or problem to solve or a data-driven software solution to develop, such as developing a machine learning algorithm to automate a specific task. To accomplish clearly defined tasks, project management may help even in the realm of data science, but for the most part, data science functions better with less goal-oriented management.

By its very nature, data science is empirical; that is, it relies more on observation and experience than on theory and logic. Data science projects are primarily exploratory and data-driven, not schedule- or budget-driven. One day, a data science team may be mining the data to identify new opportunities. Another day, it may be looking for ways to better understand the organization’s customers or to more accurately detect signs of data breaches or fraud. These efforts don’t fit into a typical project management framework. Data science teams often operate outside the scope of other functions in the organization and often explore data that’s outside the scope of what the organization captures on its own.

When you set out on an exploratory mission, you don’t know specifically what you’re going to find. The entire purpose of the mission is to uncover what is currently unknown — to unlock the secrets hidden inside the data. Data science teams celebrate those eureka! moments, when they stumble upon unexpected discoveries. To maximize their discoveries, data science teams must be able to react to the data. They must be allowed to follow where the data leads and change course when questions point them in a new direction. If they knew exactly what to expect, they wouldn’t be gaining any new knowledge.

In general, data science looks for new opportunities or challenges current assumptions. It focuses on knowledge exploration and tries to deliver insights. It’s not about cranking out deliverables on a predetermined schedule.

The difference between data science and project management is like the difference between exploring and planning. Imagine yourself exploring an unfamiliar area to find a restaurant. This would be an empirical process, similar to the approach a data science team would take. You would tour the area checking out different restaurants and their menus. You might even step inside the restaurants to check out their ambience and cleanliness and the friendliness of the staff and compare prices.

While you are exploring restaurants, you work up an appetite. You’re famished. Now, you need to decide what you’re hungry for, where and when you want to eat, how much you want to spend, and so on. You may even want to contact someone you know to meet you at the restaurant. In this scenario, you have a specific goal in mind — enjoying your next meal. To achieve that goal, some degree of planning is required. You switch from learning to planning, from data science to project management.

I once worked for an organization that tried to apply sound project management practices throughout the organization. The data science team was no exception. The team tried to adhere to the new policies by creating knowledge milestones and insight deliverables. Unfortunately, this particular experiment was a disaster. The knowledge milestones were imaginary constructs based on what the team already knew. They kept the team from exploring anything outside the scope of those milestones. Time constraints drove the team to focus on hypotheses that were easily proved or bordering on the obvious. Whenever someone ventured to ask an interesting question or attempted to challenge an assumption, that person was shut down because the team was afraid of missing a milestone.

Keep in mind that project management is beneficial to most organizations. Unfortunately, the same approach can have a chilling effect on a data science team. Project management discourages curiosity and uncertainty. It forces the data science team to merely try to verify what is already known. If they find anything unexpected, they dismiss it as a minor anomaly or a glitch instead of as a sign that they need to change direction or dig deeper for the truth.

By setting milestones and defining specific deliverables, you gamify the data science process in a counterproductive way. You end up rewarding the data science team for the wrong achievements. Instead of rewarding curiosity, questioning, and experimentation, you’re rewarding the team for verifying what’s already known.

Bottom line: Don’t think of data science as a project delivering a product. Think of it as exploration for driving discovery and innovation.

Large organizations have numerous departments or teams that perform different functions, including Research and Development (R&D), Production, Purchasing, Marketing, Human Resources (HR), and Accounting and Finance. While many teams respond well to traditional management techniques, including setting milestones and reporting their progress, data science teams do not. Their purpose and function are more akin to those of intelligence agencies, such as the CIA, than to those of typical business units. Their success isn’t measured in milestones or productivity but in knowledge and insight. As a result, they need to be “managed” in a different way.

The Wrong Way to Manage a Data Science Team

In many organizations, teams are focused on setting and meeting goals and objectives. Managers spend most of their time planning, monitoring, and correcting to ensure compliance. They have quarterly budgets and monitor them closely. They look for cost or schedule variances. If they notice deviations from what’s expected, they track down and address the cause(s) or consult with the executive team for guidance.

This approach to productivity isn’t well suited for data science teams, because their work is primarily exploratory. The data science team asks compelling questions, gathers and analyzes data, develops theories (hypotheses), and conducts experiments to test its theories. Their “product” is an ever-expanding body of organizational knowledge and insights along with, perhaps, data-driven tools to automate and optimize certain tasks.

The Right Way to Manage a Data Science Team

Some organizations, such as pharmaceutical or high-tech companies, are accustomed to working scientifically. They are engaged in a constant cycle of insight: gathering and analyzing data, asking questions, formulating hypotheses, and testing those hypotheses through experimentation. For most companies, however, exploratory work is a foreign concept, and having a data science team engaged in exploration to create new knowledge just doesn’t seem natural.

In “rank-and-file” companies, getting a data science team up and running is especially challenging. You can expect to encounter institutional pressure to maintain separation between the business and the technology of data science. You can also expect a strong push to place a compliance manager (a project manager or director) in charge of the team. Either of these two approaches would significantly slow the pace of discovery.

In a previous post, “Building a Data Science Team,” I recommend creating a small team of three to five individuals, including a research lead, data analyst, and project manager. I also recommend adding people to the team on a temporary basis from different parts of the organization to benefit from different perspectives. This team should be given some level of autonomy, so it feels free to explore, but it should also work closely with other stakeholders to ensure that it serves the organization’s business intelligence needs.

I once worked for an organization that didn’t see the value of having a research lead on the team. They stacked the team with data analysts, who were expected to deliver monthly insights to the business manager, who would then decide which insights to act on. The business manager had her own budget and wasn’t really interested in digging into the data. Her primary focus was to comply with her budget constraints. The data analysts had very little insight into the business, so they weren’t geared to ask compelling questions. As a result, the analysts and the business manager functioned independently, never harnessing the power of the organization’s data.

Another company tried putting a project manager in charge of the data science team. His focus was on ensuring that the team met its objectives, and he developed different ways to measure the team’s output. At one point, he turned questions into tasks and then measured how well the team completed its tasks. This approach failed, because the questions led to more questions, which resulted in a growing list of tasks. The more the data science team did, the more it had to do, so deadlines kept slipping. His goal was to have the data science team complete as many tasks as possible, which doesn’t align with the value proposition of a data science team — to deliver valuable business intelligence.

Tips for Installing and Managing a Productive Data Science Team

Here are a few tips for making a traditional organization more agile and data-driven:

One of the biggest challenges your data science team is likely to encounter is gaining access to all of the organization’s data. Many organizations have data silos: data repositories managed by different departments and isolated from the rest of the organization.

The term “silo” is borrowed from agriculture. Farmers typically store grain in tall, hollow towers called silos, each of which is an independent structure. Silos typically protect the grain from the weather and isolate different stores of grain, so if one store is contaminated by pests or disease, not all of the grain is lost. Data silos are similar in that each department’s database is separate; data from one department isn’t mixed with data from another.

Data silos develop for various reasons. Often they result from common practice — for example, human resources (HR) creates its own database, because it can’t imagine anyone else in the organization needing its data or because it needs to ensure that sensitive employee data is secure. Data silos may also arise due to office politics — one team doesn’t want to share its data with another team that it perceives to be a threat to its position in the organization.

If your data science team encounters a data silo, it needs to find a way to access that data. Gaining access to data is one of the primary responsibilities of the project manager on the data science team. After the data analyst identifies the data sets necessary for the team to do its job, the project manager needs to figure out how to gain access to that data.

Although data silos may be useful for protecting sensitive data from malware and from unauthorized access, they also cause a number of problems, including the following:

I once worked for an organization that was trying to migrate all its data to a central data warehouse. They felt that the organization wasn’t getting enough insight from its data. The organization had just gone through a data governance transformation and wanted to govern how the data was controlled in the organization.

When they finally got into their data, they realized how much was locked away in silos that no one knew about. Over many years, each department had created its own processes, schemas, and security procedures. The organization wanted to get value from this data, but that data was stored on different servers across the entire company. To compound the problem, the various departments were reluctant to share their data. It was as if the project manager was asking them to share toothbrushes.

One of the first steps toward becoming a data-driven organization is to break down the data silos:

By breaking down data silos, you give everyone in your organization self-serve access to the data they need to do their jobs better.
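To make the idea concrete, here is a minimal sketch of what combining two departmental silos into a single customer view might look like once access has been granted. The record layouts, customer IDs, and the `combine_silos` helper are hypothetical; real silos usually differ in schema and security rules and need far more reconciliation than this.

```python
# Hypothetical extracts from two departmental silos, keyed by customer ID.
sales_records = {
    "C001": {"total_spend": 1200},
    "C002": {"total_spend": 450},
}
support_records = {
    "C001": {"open_tickets": 0},
    "C003": {"open_tickets": 2},
}


def combine_silos(*silos):
    """Merge per-customer records from several silos into one view."""
    combined = {}
    for silo in silos:
        for customer_id, fields in silo.items():
            combined.setdefault(customer_id, {}).update(fields)
    return combined


customer_view = combine_silos(sales_records, support_records)
# Customers that appear in only one silo are gaps that a shared view makes visible.
only_in_one = sorted(set(sales_records) ^ set(support_records))
```

Even in this toy case, the combined view surfaces something neither department could see alone: which customers are known to one silo but missing from the other.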

If you’re a project manager on a data science team, try to keep the following key points in mind: